2 Simulating Differential Abundance

Before we consider simulating entire microbial communities, with their complex correlation structures, let’s learn simulators for individual taxa. This is already enough to analyze taxon-level differential abundance approaches. For example, at the end of this session, we’ll apply a simulator to study the power and false discovery rate of limma-voom when applied to microbiome data (as opposed to the bulk RNA-seq data for which it was originally proposed). Also, marginal modeling is a first step towards multivariate (community-wide) modeling, which we’ll explore in the next session.

Let’s load the necessary packages. Instructions for scDesigner and MIGsim

can be found in the pre-workshop announcement. SummarizedExperiment is on

Bioconductor, and all other packages are on CRAN.

Let’s train a simulator to fit the Atlas dataset. We’ll use bmi_group as the

covariate of interest – we want to see how microbiome composition varies among

people with different BMI. We found it helpful

to fixed zero inflation across the population (nu), so we have set nu = ~1.

Finally, since we want to eventually evaluate testing methods that are designed

for count data, we have used the (Z)ero (I)nflated (N)egative (B)inomial

location-shape-scale model.

data(atlas1006, package = "microbiome")

atlas <- convertFromPhyloseq(atlas1006) |>

filter(time == 0) |>

dplyr::mutate(

bmi_group = case_when(

str_detect(bmi_group, "obese") ~ "obese",

bmi_group == "lean" ~ "lean",

bmi_group == "overweight" ~ "overweight",

)

) |>

dplyr::filter(bmi_group %in% c("obese", "lean", "overweight")) |>

dplyr::mutate(

bmi_group = factor(bmi_group, levels = c("obese", "overweight", "lean"))

)

fmla <- list(mu = ~bmi_group, sigma = ~bmi_group, nu = ~1)We’ll remove the genera which are never observed in this dataset.

colData(atlas)$log_depth <- log(colSums(assay(atlas)))

zero_var <- apply(assay(atlas), 1, var) == 0

atlas <- atlas[!zero_var, ]Next we will select features that could work with ZINBF() estimation.

select_i <- c(1:2)

colData(atlas) <- colData(atlas)[, c("bmi_group", "sample")]

for (i in 3:nrow(atlas)) {

#print(glue("{i}/{dim(atlas)[1]}"))

exper_tmp <- atlas[c(select_i, i), ]

sim <- setup_simulator(exper_tmp, fmla, ~ ZINBF())

error_warning <- estimate(

sim,

control = gamlss.control(n.cyc = 20, mu.step = 0.1)

) |>

tryCatch(warning = \(w) w, error = \(e) e)

if (is.na(class(error_warning)[2])) {

select_i <- c(select_i, i)

}

}

colData(atlas) <- colData(atlas)[, c("bmi_group", "sample")]

atlas_filtered <- atlas[select_i, ]

sim <- setup_simulator(atlas_filtered, fmla, ~ ZINBF()) |>

estimate(control = gamlss.control(n.cyc = 20, mu.step = 0.1))2.1 Critique

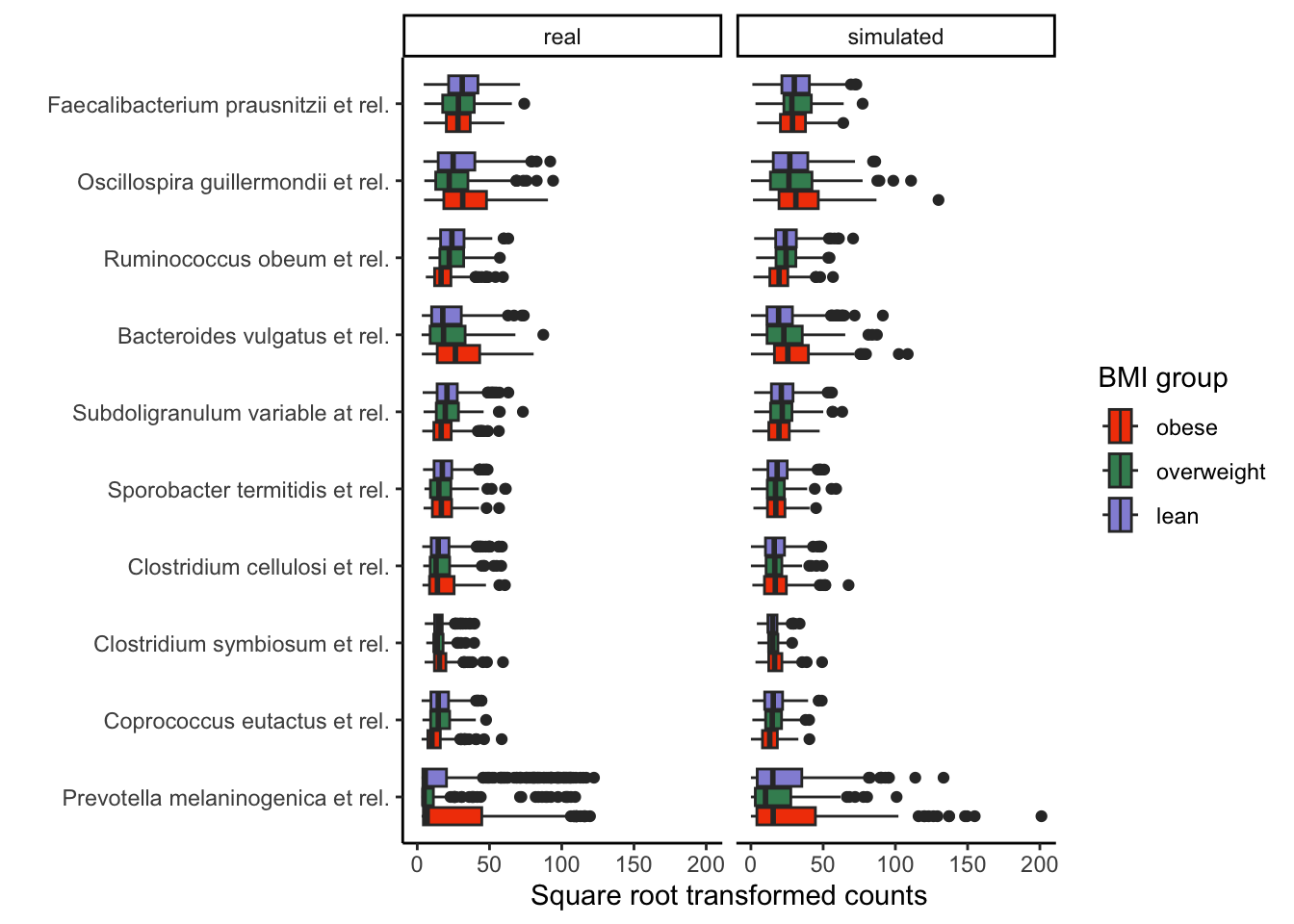

Exercise: The block below combines the real and simulated experiments and visualizes their difference both graphically and quantitatively. With your neighbors, discuss how well the simulator approximates the original template.

combined <- bind_rows(

real = pivot_experiment(atlas_filtered), # real data

simulated = pivot_experiment(sample(sim)), # simulated

.id = "source"

)

top_features <- assay(atlas_filtered) |>

rowMeans() |>

sort() |>

tail(10) |>

names()

combined |>

filter(feature %in% top_features) |>

ggplot() +

geom_boxplot(

aes(sqrt(value), reorder(feature, value, median), fill = bmi_group)

) +

facet_grid(. ~ source) +

labs(x = "Square root transformed counts", y = "", fill = "BMI group") +

theme(legend.position = "right") +

scale_fill_manual(values = rev(c("#9491D9", "#3F8C61", "#F24405")))

kdetest.group <- function(data) {

x <- data |> filter(source == "real")

y <- data |> filter(source == "simulated")

kde.test(x$value, y$value)$pvalue

}

prop.accepted.H0 <- sapply(

c("lean", "overweight", "obese"),

\(group) {

group_filter <- map(

unique(combined$feature),

~ combined |>

filter(feature == . & bmi_group == group)

)

adjpvalue <- sapply(

seq_along(select_i),

\(i) tryCatch(kdetest.group(group_filter[[i]]), error = \(e) NA)

) |>

p.adjust(method = "BH")

mean(adjpvalue > 0.05, na.rm = TRUE)

}

)

prop.accepted.H0## lean overweight obese

## 0.7441860 0.7857143 0.7380952Solution: The simulated data are generally similar to the original data. However, for some genera with positively skewed distributions and a high number of outliers, such as Prevotella melaninogenica et rel, the simulation reached its limits, explaining why the KS two-sample test returned significant differences between the real and simulated data for some genera. Nonetheless, the ordering of abundances between the groups typically agrees between the real and simulated data. The interquartile ranges for each taxon also seem to roughly match.

2.2 Power Analysis Loop

To run a power analysis, we need to define datasets that have known ground

truth. Then, we can run any differential abundance methods we want and see how

many of the true associations are recovered (and how many nulls are falsely

rejected). To this end, we’ll remove associations from genera based on Wilcoxon

Rank Sum test. We’ll choose to remove the genera that have a q valuse<0.1

in the original data.This is helpful because, even if we use bmi_group in our

formula, if in reality there is no (or very weak) effect, then even if our

simulator considers it as a true signal, the difference may be hard to detect.

Eventually, our package will include functions for modifying these effects

directly; at this point, though, we can only indirectly modify parameters by

re-estimating them with new formulas.

wilcox_res <- differential_analysis(atlas_filtered, "wilcox-clr")

nulls <- rownames(wilcox_res[wilcox_res$q_value.bmi_group. > 0.1, ])

sim <- sim |> scDesigner::mutate(any_of(nulls), link = ~1)

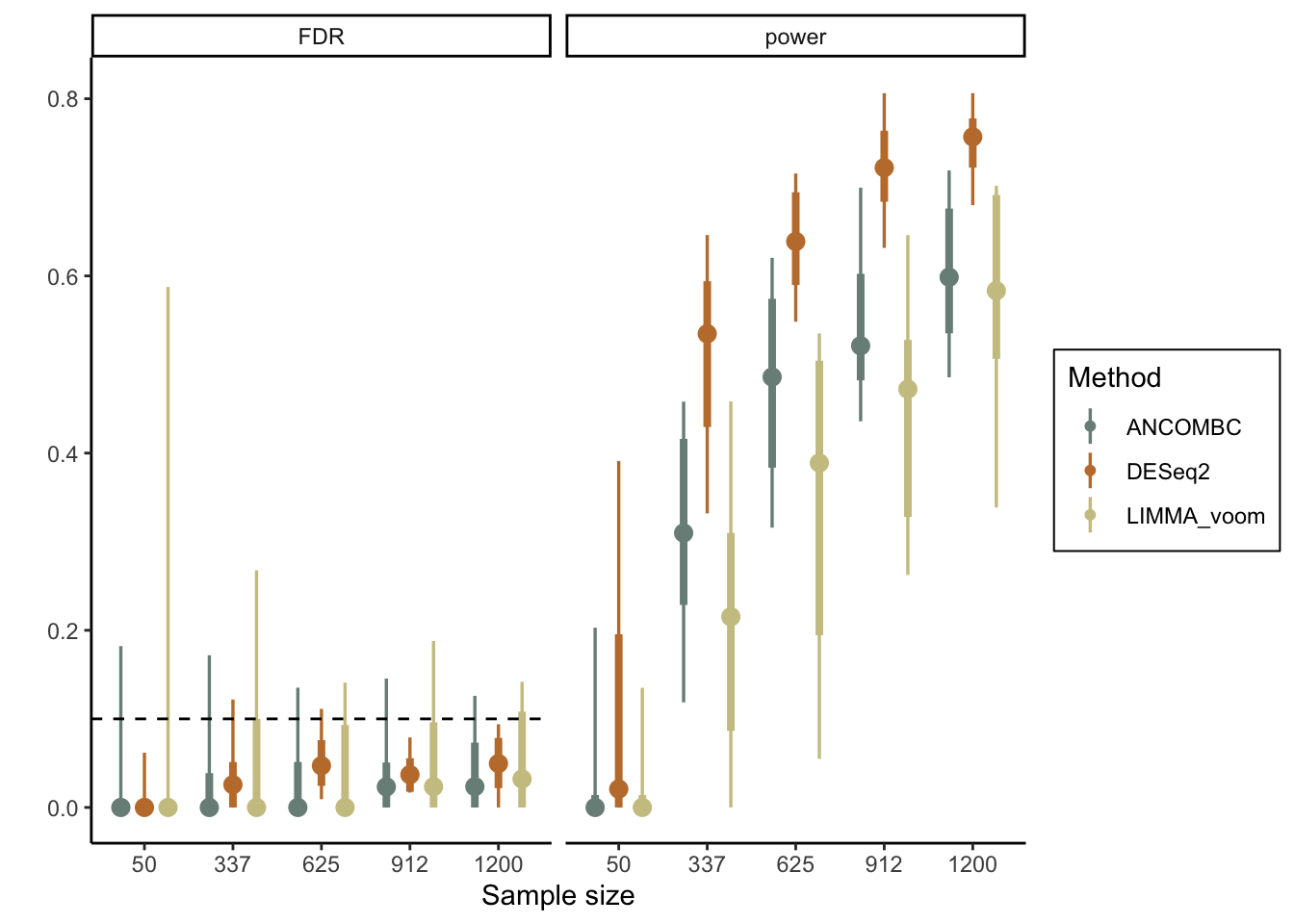

sim <- estimate(sim, control = gamlss.control(n.cyc = 20, mu.step = 0.1))Now that we have ground truth associations, we’ll evaluate LIMMA-voom, ANCOMBC, DESeq2 for differential analysis. We consider sample sizes ranging from 50 to 1200, and we simulate 20 datasets for each sample size.

config <- expand.grid(

sample_size = floor(seq(50, 1200, length.out = 5)),

n_rep = 1:20

) |>

mutate(run = as.character(row_number()))

results <- list()

for (i in seq_len(nrow(config))) {

atlas_ <- sample_n_(sim, config$sample_size[i])

colnames(atlas_) <- glue("Sample-{seq_len(config$sample_size[i])}")

results[[i]] <- sapply(c("LIMMA_voom", "ANCOMBC", "DESeq2"),

\(m) {

differential_analysis(atlas_, m) |>

da_metrics(nulls)

},

simplify = FALSE

)

#print(glue("{i}/{nrow(config)}"))

}Exercise: Visualize the results. How would you interpret the results of the power analysis? Based on your earlier critique of the simulator, do you think the estimated power here is conservative, liberal, or about right?

Solution: We’ll use the stat_pointinterval function from the ggdist package to

visualize the range of empirical power estimates across sample sizes. We can see

that the average false discovery proportion is always controlled below 0.1,

though the variance in this proportion can be high. We can also see that

we would have quite good power with \(n \geq 625\) samples, but the worst case

scenarios can be quite poor for anything with fewer samples.

power_fdr <- map_dfr(results, ~ bind_rows(., .id = "method"), .id = "run") |>

dplyr::mutate(sample_size = rep(config$sample_size, each = 6))

ggplot(power_fdr) +

tidybayes::stat_pointinterval(aes(factor(sample_size), value, group = method, colour = method),

position = position_dodge(width = 0.8)

) +

geom_hline(aes(yintercept = 0.1, alpha = metric), linetype = 2) +

facet_wrap(~metric) +

labs(x = "Sample size", y = "", color = "Method") +

scale_color_manual(values = wes_palette("Moonrise2", n = 3)) +

scale_alpha_discrete(guide = "none", range = c(1, 0)) +

theme(legend.position = "right", legend.box.background = element_rect(colour = "black"))

## R version 4.4.1 Patched (2024-08-21 r87049)

## Platform: aarch64-apple-darwin20

## Running under: macOS Sonoma 14.5

##

## Matrix products: default

## BLAS: /Library/Frameworks/R.framework/Versions/4.4-arm64/Resources/lib/libRblas.0.dylib

## LAPACK: /Library/Frameworks/R.framework/Versions/4.4-arm64/Resources/lib/libRlapack.dylib; LAPACK version 3.12.0

##

## locale:

## [1] en_US.UTF-8/en_US.UTF-8/en_US.UTF-8/C/en_US.UTF-8/en_US.UTF-8

##

## time zone: America/Chicago

## tzcode source: internal

##

## attached base packages:

## [1] splines parallel stats4 stats graphics grDevices utils datasets methods base

##

## other attached packages:

## [1] doRNG_1.8.6 rngtools_1.5.2 foreach_1.5.2

## [4] ggpubr_0.6.0 ggrepel_0.9.6 edgeR_4.3.16

## [7] limma_3.61.10 ANCOMBC_2.7.0 stringr_1.5.1

## [10] ks_1.14.3 tidySummarizedExperiment_1.15.1 ttservice_0.4.1

## [13] SimBench_0.99.1 wesanderson_0.3.7 mia_1.13.36

## [16] MultiAssayExperiment_1.31.5 TreeSummarizedExperiment_2.13.0 Biostrings_2.73.1

## [19] XVector_0.45.0 SingleCellExperiment_1.27.2 gamlss_5.4-22

## [22] nlme_3.1-166 gamlss.dist_6.1-1 gamlss.data_6.0-6

## [25] scDesigner_0.0.0.9000 purrr_1.0.2 MIGsim_0.0.0.9000

## [28] tidyr_1.3.1 tibble_3.2.1 scico_1.5.0

## [31] pwr_1.3-0 patchwork_1.3.0 mutoss_0.1-13

## [34] mvtnorm_1.3-1 mixOmics_6.29.0 lattice_0.22-6

## [37] MASS_7.3-61 glue_1.8.0 ggplot2_3.5.1

## [40] ggdist_3.3.2 gamboostLSS_2.0-7 mboost_2.9-11

## [43] stabs_0.6-4 forcats_1.0.0 dplyr_1.1.4

## [46] SummarizedExperiment_1.35.1 Biobase_2.65.1 GenomicRanges_1.57.1

## [49] GenomeInfoDb_1.41.1 IRanges_2.39.2 S4Vectors_0.43.2

## [52] BiocGenerics_0.51.1 MatrixGenerics_1.17.0 matrixStats_1.4.1

## [55] SpiecEasi_1.1.3 CovTools_0.5.4

##

## loaded via a namespace (and not attached):

## [1] igraph_2.0.3 ica_1.0-3 plotly_4.10.4

## [4] Formula_1.2-5 scater_1.33.4 zlibbioc_1.51.1

## [7] tidyselect_1.2.1 bit_4.5.0 doParallel_1.0.17

## [10] pspline_1.0-20 blob_1.2.4 S4Arrays_1.5.8

## [13] png_0.1-8 plotrix_3.8-4 cli_3.6.3

## [16] CVXR_1.0-14 pulsar_0.3.11 arrayhelpers_1.1-0

## [19] multtest_2.61.0 goftest_1.2-3 textshaping_0.4.0

## [22] bluster_1.15.1 BiocNeighbors_1.99.1 uwot_0.2.2

## [25] mime_0.12 evaluate_1.0.0 tidytree_0.4.6

## [28] leiden_0.4.3.1 stringi_1.8.4 backports_1.5.0

## [31] lmerTest_3.1-3 Exact_3.3 gsl_2.1-8

## [34] httpuv_1.6.15 AnnotationDbi_1.67.0 magrittr_2.0.3

## [37] mclust_6.1.1 ADGofTest_0.3 pcaPP_2.0-5

## [40] rootSolve_1.8.2.4 sctransform_0.4.1 lpSolve_5.6.21

## [43] ggbeeswarm_0.7.2 DBI_1.2.3 jquerylib_0.1.4

## [46] withr_3.0.1 corpcor_1.6.10 systemfonts_1.1.0

## [49] class_7.3-22 lmtest_0.9-40 compositions_2.0-8

## [52] htmlwidgets_1.6.4 fs_1.6.4 labeling_0.4.3

## [55] SparseArray_1.5.38 DEoptimR_1.1-3 cellranger_1.1.0

## [58] DESeq2_1.45.3 tidybayes_3.0.7 extrafont_0.19

## [61] lmom_3.0 flare_1.7.0.1 reticulate_1.39.0

## [64] minpack.lm_1.2-4 geigen_2.3 zoo_1.8-12

## [67] knitr_1.48 nnls_1.5 UCSC.utils_1.1.0

## [70] svUnit_1.0.6 SHT_0.1.8 decontam_1.25.0

## [73] fansi_1.0.6 grid_4.4.1 rhdf5_2.49.0

## [76] data.table_1.16.0 vegan_2.6-8 RSpectra_0.16-2

## [79] irlba_2.3.5.1 extrafontdb_1.0 DescTools_0.99.56

## [82] ellipsis_0.3.2 fastDummies_1.7.4 ade4_1.7-22

## [85] lazyeval_0.2.2 yaml_2.3.10 survival_3.7-0

## [88] scattermore_1.2 crayon_1.5.3 mediation_4.5.0

## [91] tensorA_0.36.2.1 phyloseq_1.49.0 RcppAnnoy_0.0.22

## [94] RColorBrewer_1.1-3 progressr_0.14.0 later_1.3.2

## [97] ggridges_0.5.6 codetools_0.2-20 base64enc_0.1-3

## [100] Seurat_5.1.0 KEGGREST_1.45.1 Rtsne_0.17

## [103] shape_1.4.6.1 randtoolbox_2.0.4 foreign_0.8-87

## [106] pkgconfig_2.0.3 xml2_1.3.6 spatstat.univar_3.0-1

## [109] downlit_0.4.4 scatterplot3d_0.3-44 spatstat.sparse_3.1-0

## [112] ape_5.8 viridisLite_0.4.2 xtable_1.8-4

## [115] highr_0.11 car_3.1-2 fastcluster_1.2.6

## [118] plyr_1.8.9 httr_1.4.7 rbibutils_2.2.16

## [121] tools_4.4.1 globals_0.16.3 SeuratObject_5.0.2

## [124] kde1d_1.0.7 beeswarm_0.4.0 htmlTable_2.4.3

## [127] broom_1.0.6 checkmate_2.3.2 rgl_1.3.1

## [130] assertthat_0.2.1 lme4_1.1-35.5 rngWELL_0.10-9

## [133] digest_0.6.37 bayesm_3.1-6 permute_0.9-7

## [136] numDeriv_2016.8-1.1 bookdown_0.40 Matrix_1.7-0

## [139] farver_2.1.2 reshape2_1.4.4 yulab.utils_0.1.7

## [142] viridis_0.6.5 DirichletMultinomial_1.47.0 rpart_4.1.23

## [145] cachem_1.1.0 polyclip_1.10-7 WGCNA_1.73

## [148] Hmisc_5.1-3 generics_0.1.3 dynamicTreeCut_1.63-1

## [151] parallelly_1.38.0 biomformat_1.33.0 statmod_1.5.0

## [154] impute_1.79.0 huge_1.3.5 ragg_1.3.3

## [157] RcppHNSW_0.6.0 ScaledMatrix_1.13.0 carData_3.0-5

## [160] minqa_1.2.8 pbapply_1.7-2 glmnet_4.1-8

## [163] spam_2.10-0 utf8_1.2.4 gtools_3.9.5

## [166] shapes_1.2.7 readxl_1.4.3 preprocessCore_1.67.0

## [169] inum_1.0-5 ggsignif_0.6.4 energy_1.7-12

## [172] gridExtra_2.3 shiny_1.9.1 GenomeInfoDbData_1.2.12

## [175] rhdf5filters_1.17.0 memoise_2.0.1 rmarkdown_2.28

## [178] scales_1.3.0 gld_2.6.6 stabledist_0.7-2

## [181] partykit_1.2-22 future_1.34.0 RANN_2.6.2

## [184] spatstat.data_3.1-2 rstudioapi_0.16.0 cluster_2.1.6

## [187] spatstat.utils_3.1-0 hms_1.1.3 fitdistrplus_1.2-1

## [190] munsell_0.5.1 cowplot_1.1.3 colorspace_2.1-1

## [193] ellipse_0.5.0 rlang_1.1.4 quadprog_1.5-8

## [196] copula_1.1-4 DelayedMatrixStats_1.27.3 sparseMatrixStats_1.17.2

## [199] dotCall64_1.1-1 scuttle_1.15.4 mgcv_1.9-1

## [202] xfun_0.47 coda_0.19-4.1 e1071_1.7-16

## [205] TH.data_1.1-2 posterior_1.6.0 iterators_1.0.14

## [208] rARPACK_0.11-0 abind_1.4-8 libcoin_1.0-10

## [211] treeio_1.29.1 gmp_0.7-5 Rhdf5lib_1.27.0

## [214] DECIPHER_3.1.4 Rdpack_2.6.1 promises_1.3.0

## [217] RSQLite_2.3.7 sandwich_3.1-1 proxy_0.4-27

## [220] DelayedArray_0.31.11 Rmpfr_0.9-5 GO.db_3.20.0

## [223] compiler_4.4.1 prettyunits_1.2.0 boot_1.3-31

## [226] distributional_0.5.0 beachmat_2.21.6 rbiom_1.0.3

## [229] listenv_0.9.1 Rcpp_1.0.13 Rttf2pt1_1.3.12

## [232] BiocSingular_1.21.4 tensor_1.5 progress_1.2.3

## [235] BiocParallel_1.39.0 insight_0.20.5 spatstat.random_3.3-2

## [238] R6_2.5.1 fastmap_1.2.0 multcomp_1.4-26

## [241] rstatix_0.7.2 vipor_0.4.7 ROCR_1.0-11

## [244] rsvd_1.0.5 nnet_7.3-19 gtable_0.3.5

## [247] KernSmooth_2.23-24 miniUI_0.1.1.1 deldir_2.0-4

## [250] htmltools_0.5.8.1 ggthemes_5.1.0 RcppParallel_5.1.9

## [253] bit64_4.5.2 spatstat.explore_3.3-2 lifecycle_1.0.4

## [256] nloptr_2.1.1 sass_0.4.9 vctrs_0.6.5

## [259] robustbase_0.99-4 VGAM_1.1-12 slam_0.1-53

## [262] spatstat.geom_3.3-3 sp_2.1-4 future.apply_1.11.2

## [265] pracma_2.4.4 rvinecopulib_0.6.3.1.1 bslib_0.8.0

## [268] pillar_1.9.0 locfit_1.5-9.10 jsonlite_1.8.9

## [271] expm_1.0-0