3 Multivariate Power Analysis

How can we choose sample sizes in more complex bioinformatic workflows, where we simultaneously analyze many features (taxa, genes, metabolites) in concert? While traditional, analytical power analysis often breaks down, simulation can still be effective. We’ll look at a concrete case study where we try to choose a good sample size for a sparse partial least squares discriminant analysis (sPLS-DA) of the Type I Diabetes (T1D) gut microbiome.

First, we’ll load the required packages. Relative to our first session, the only

additional package that we need is mixOmics. This can be installed using

BiocManager::install('mixOmics').

3.1 Interpreting PLS-DA

The T1D dataset below describes 427 metabolites from the gut microbiomes of 40 T1D patients and 61 healthy controls. These data were originally gathered by Gavin et al. (2018), who were motivated by the relationship between the pancreas and intestinal issues often experienced by T1D patients. They were especially curious about whether microbime-associated proteins might be related to increased risk for T1D development.

data(t1d)The output that we care about are the PLD-DA scores and loadings. The wrapper below directly gives us this output without our having to explicitly set hyerparameters, though you can look here to see how the function was defined.

result <- splsda_fit(t1d)

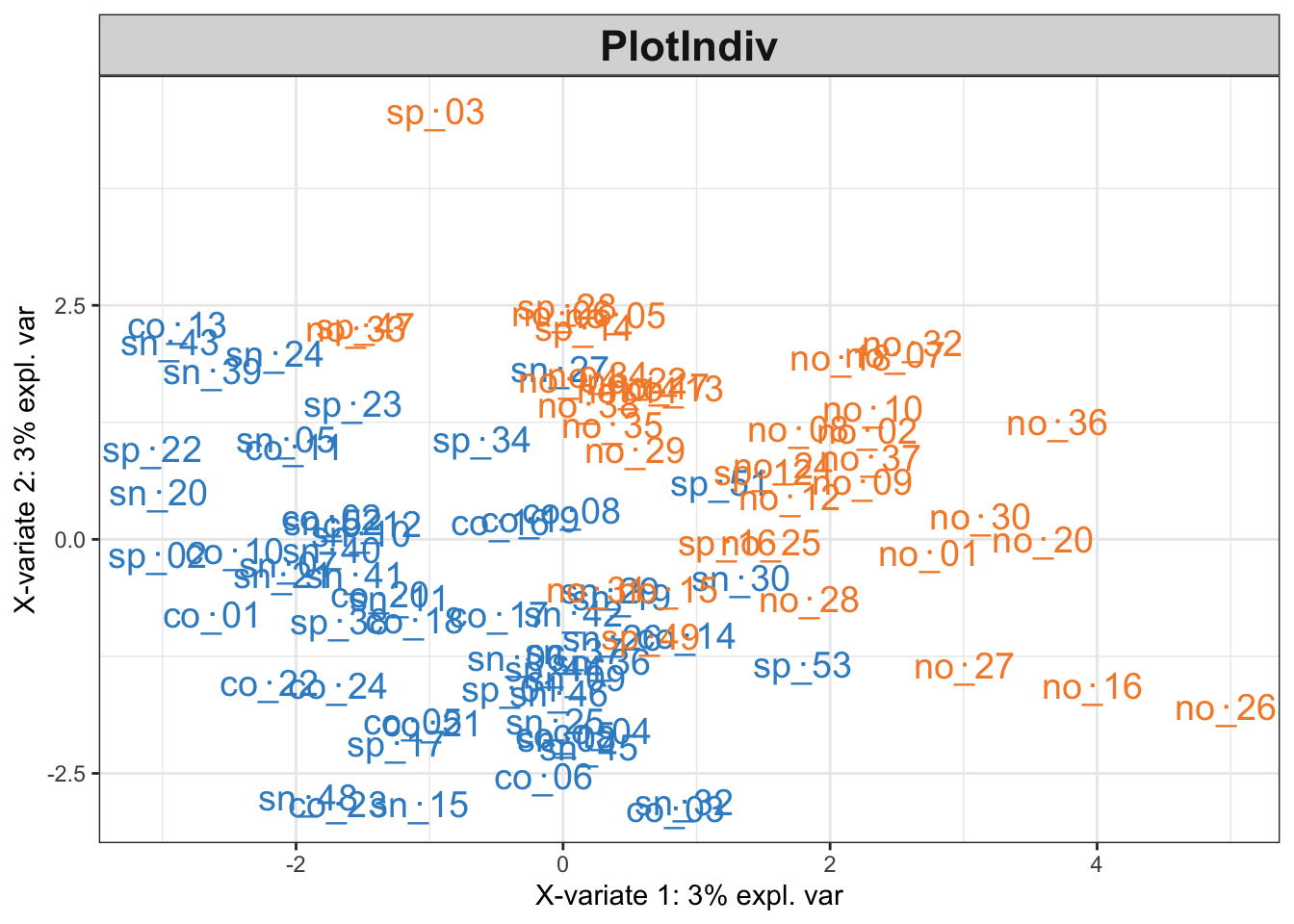

plotIndiv(result$fit, cex = 5)

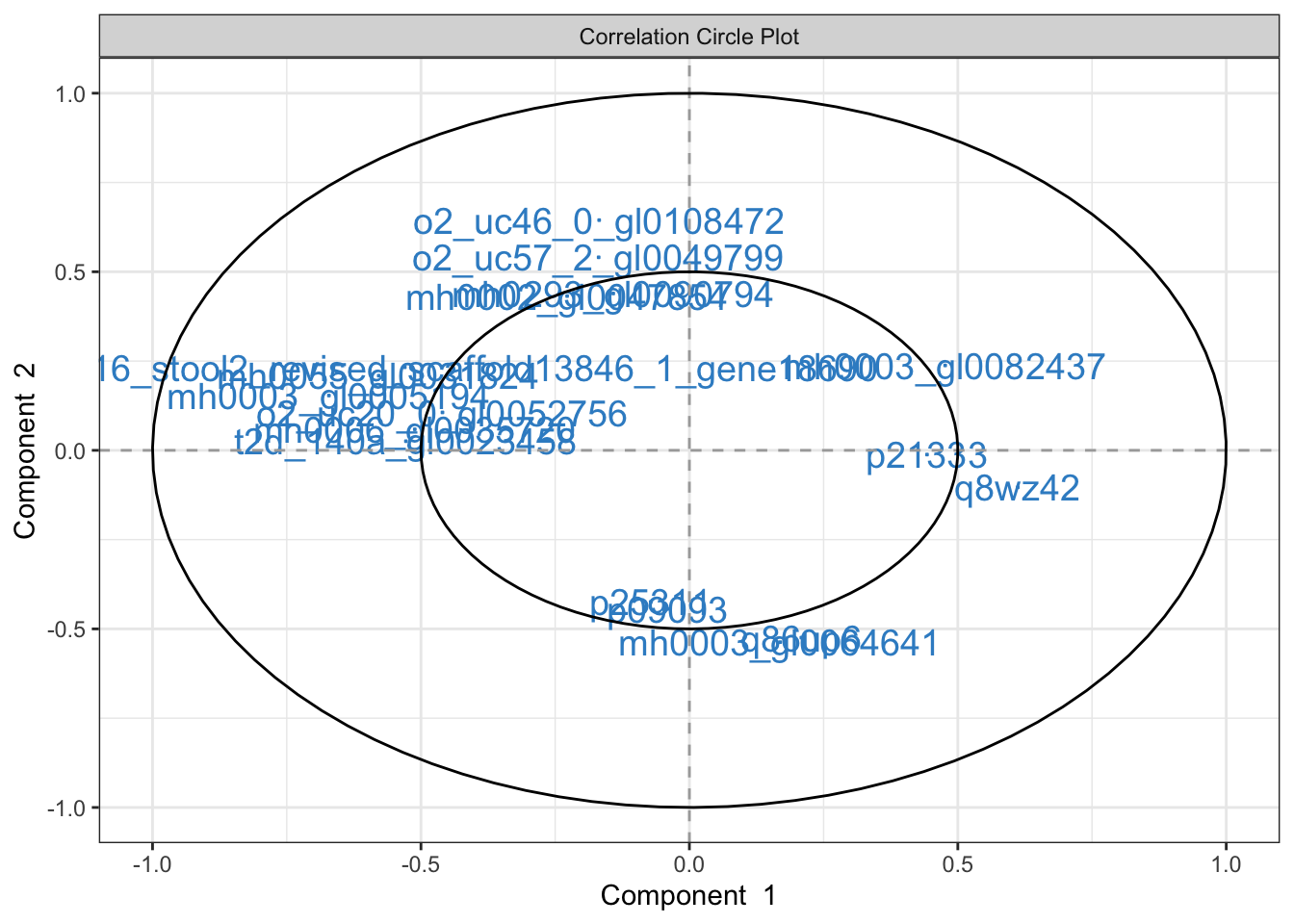

plotVar(result$fit, cutoff = 0.4, cex = 5)

Exercise: Discuss the output of plotIndiv. How does plotVar shape

your interpretation?

Solution: The first two dimensions of the sPLS-DA pick on distinctions between the T1D (orange) and control (blue) groups. The variance by these first two dimensions is relatively small – variation in protein signatures may not be easiliy captured in two dimensions or may not generally be correlated with the disease response. Since most of the differences between groups are captured by the first dimension, we can look for features that are highly correlated with this dimension to see which proteins might be driving the difference. For example, we can conclude that T1D samples tend to have higher levels of p21333 and q8wz42 compared to the controls.

3.2 Estimation

Let’s estimate a simulator where every protein is allowed ot vary across T1D type. Since the data have already been centered log-ratio transformed, it’s okay to treat these as Gaussian.

simulator <- setup_simulator(t1d, link_formula = ~outcome2, family = ~ GaussianLSS()) |>

estimate(mstop = 100)3.3 Evaluation

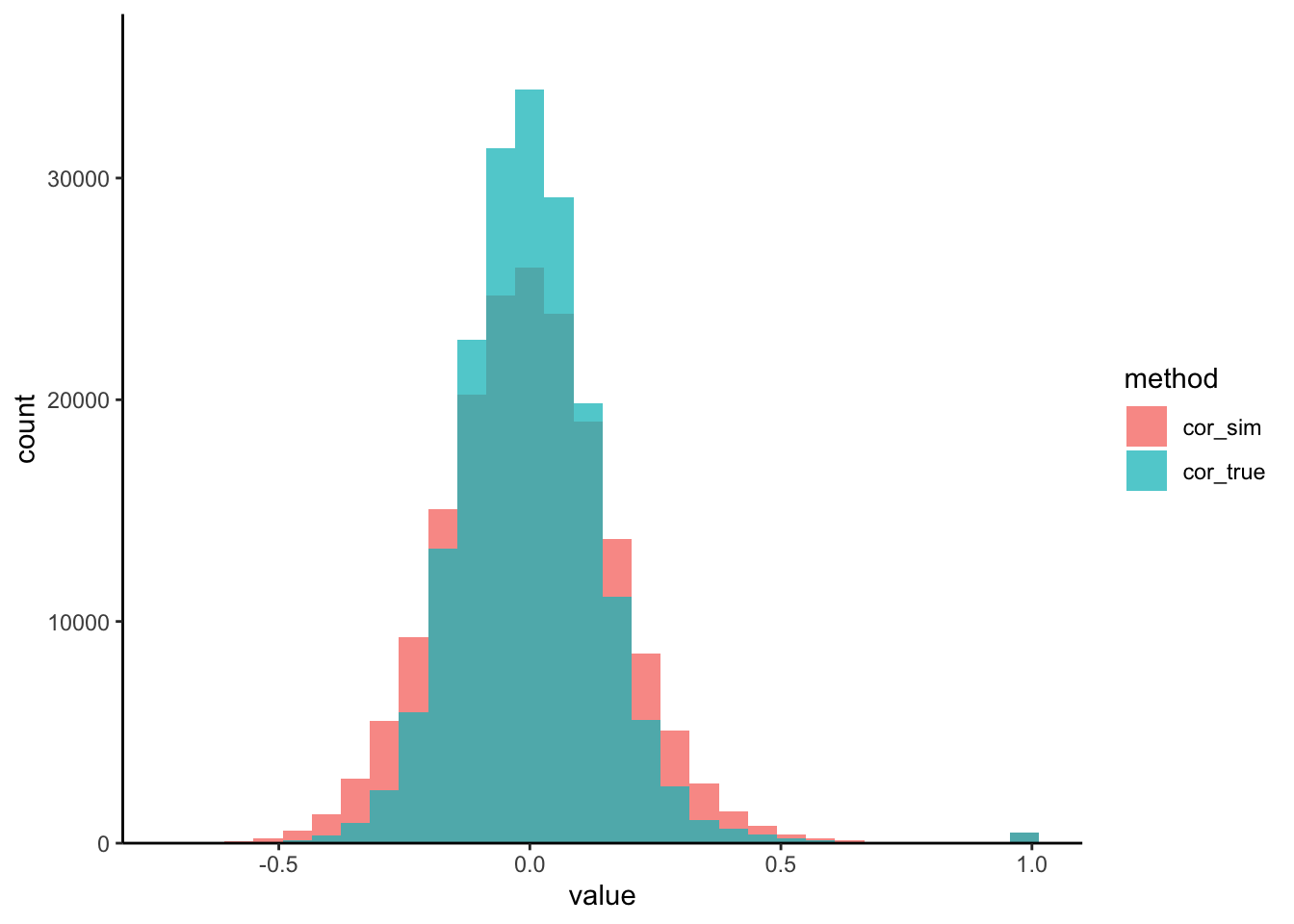

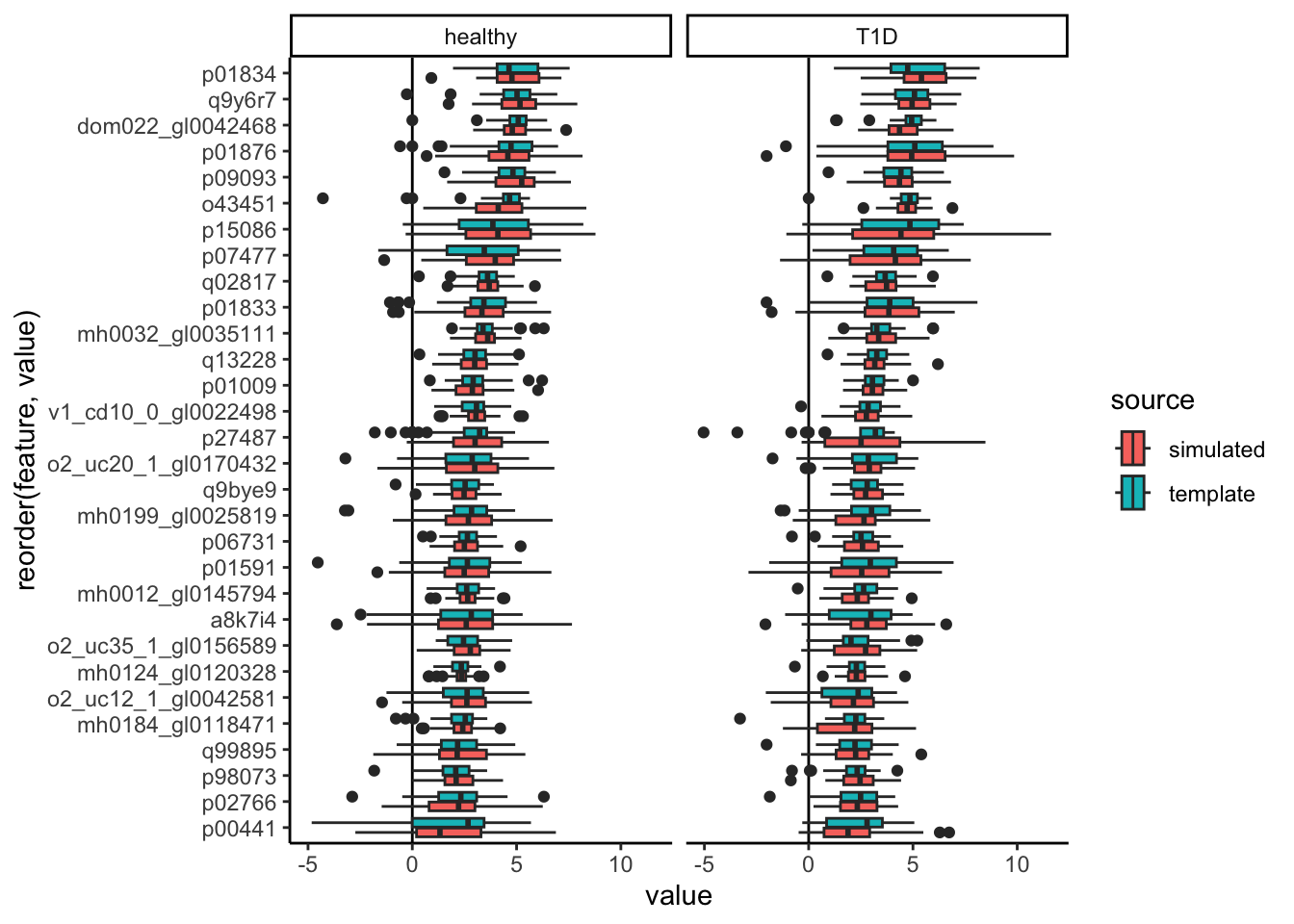

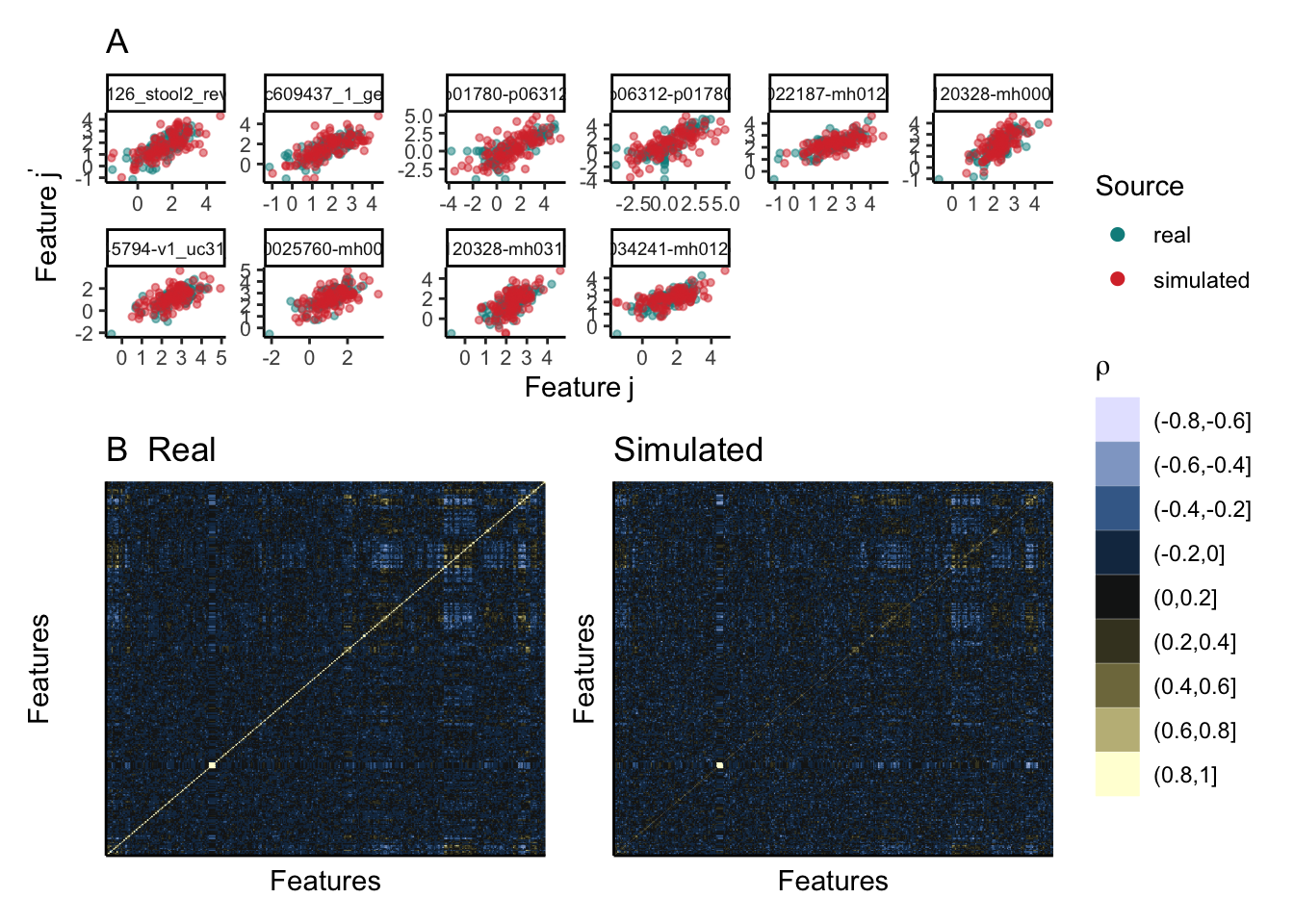

Last time, we saw how we could visualize marginal simulator quality. How can we tell whether a joint simulator is working, though? One simple check is to analyze the pairwise correlations. Since the copula model is designed to capture second-order moments, it should at the very least effectively capture the correlations.

We’ve written a small helper that visualizes the pairwise protein-protein correlations from both the real and the simulated datasets. We seem to be often overestimating the correlation strength. This is likely a consequence of the high-dimensionality of the problem.

sim_exper <- sample(simulator)

correlation_hist(t1d, sim_exper)

Exercise: To address this, let’s try modifying the copula_def argument of

setup_simulator to use a more suitable simulator. Generate new correlation

histograms and comment on the changes you observe. You only need to modify the

commented lines (#) lines in the block below.

simulator <- setup_simulator(

t1d,

link_formula = ~outcome2,

family = ~ GaussianLSS(),

copula_def = # fill this code in

) |>

estimate(mstop = 100)

sim_exper <- sample(simulator) # and then run these two lines

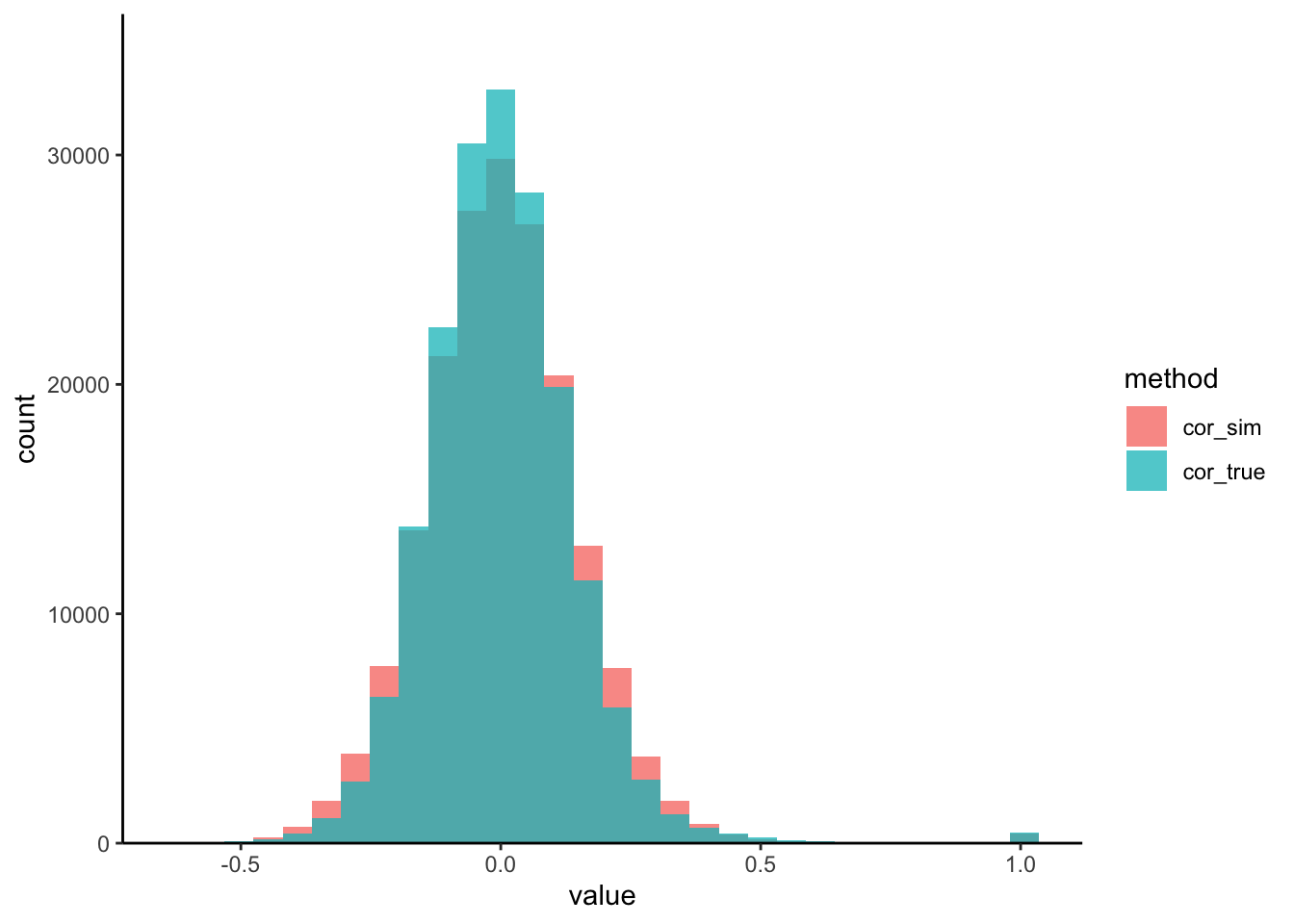

correlation_hist(t1d, sim_exper) #Solution: For our copula, we can use a covariance estimator of Cai and Liu

(2011), that is

suited for high dimensions. Larger values of thr will increase the stability

of our estimates, but at the cost of potentially missing or weakening true

correlations. In line with this point, our new simulated correlations are more

concentrated.

simulator <- setup_simulator(

t1d,

link_formula = ~outcome2,

family = ~ GaussianLSS(),

copula_def = copula_adaptive(thr = 0.1)

) |>

estimate(mstop = 100)

sim_exper <- sample(simulator)

correlation_hist(t1d, sim_exper) :::

:::

contrast_boxplot(t1d, sim_exper, ~. ~ reorder(outcome2, value))

rhos <- list(sim = cor(t(assay(sim_exper))), real = cor(t(assay(t1d))))

diag(rhos[[1]]) <- NA

top_cors <- which(abs(rhos[[1]]) > quantile(abs(rhos[[1]]), 0.999, na.rm = TRUE), arr.ind = TRUE)

# generate data for the pairwise scatterplots

pairs_data <- list(

real = subset_correlated(assay(t1d), top_cors),

simulated = subset_correlated(assay(sim_exper), top_cors)

) |>

bind_rows(.id = "source")

keep_pairs <- pairs_data |>

dplyr::select(source, pair, correlation) |>

unique() |>

filter(source == "real", correlation > 0.72, correlation < 0.8) |>

pull(pair)

# visualize the pairwise scatterplots

p1 <- pairs_data |>

filter(pair %in% keep_pairs) |>

ggplot() +

geom_point(aes(feature1, feature2, col = source), alpha = 0.5, size = 1) +

scale_color_manual(values = c("#038C8C", "#D93636")) +

guides(color = guide_legend(override.aes = list(alpha = 1, size = 2))) +

labs(title = "A", col = "Source", x = expression("Feature" ~ j), y = expression("Feature" ~ j^"'")) +

facet_wrap(~ reorder(pair, -correlation), ncol = 6, scales = "free") +

theme(

strip.text = element_text(size = 7),

axis.text = element_text(size = 8)

)

# create the heatmap

ft_order <- hclust(dist(assay(t1d)))$order

p2 <- correlation_heatmap(rhos$real, ft_order) +

labs(title = "B Real") +

(correlation_heatmap(rhos$sim, ft_order) +

labs(title = "Simulated") +

theme(legend.position = "none"))

(p1 / p2) +

plot_layout(guides = "collect", heights = c(1.2, 2))

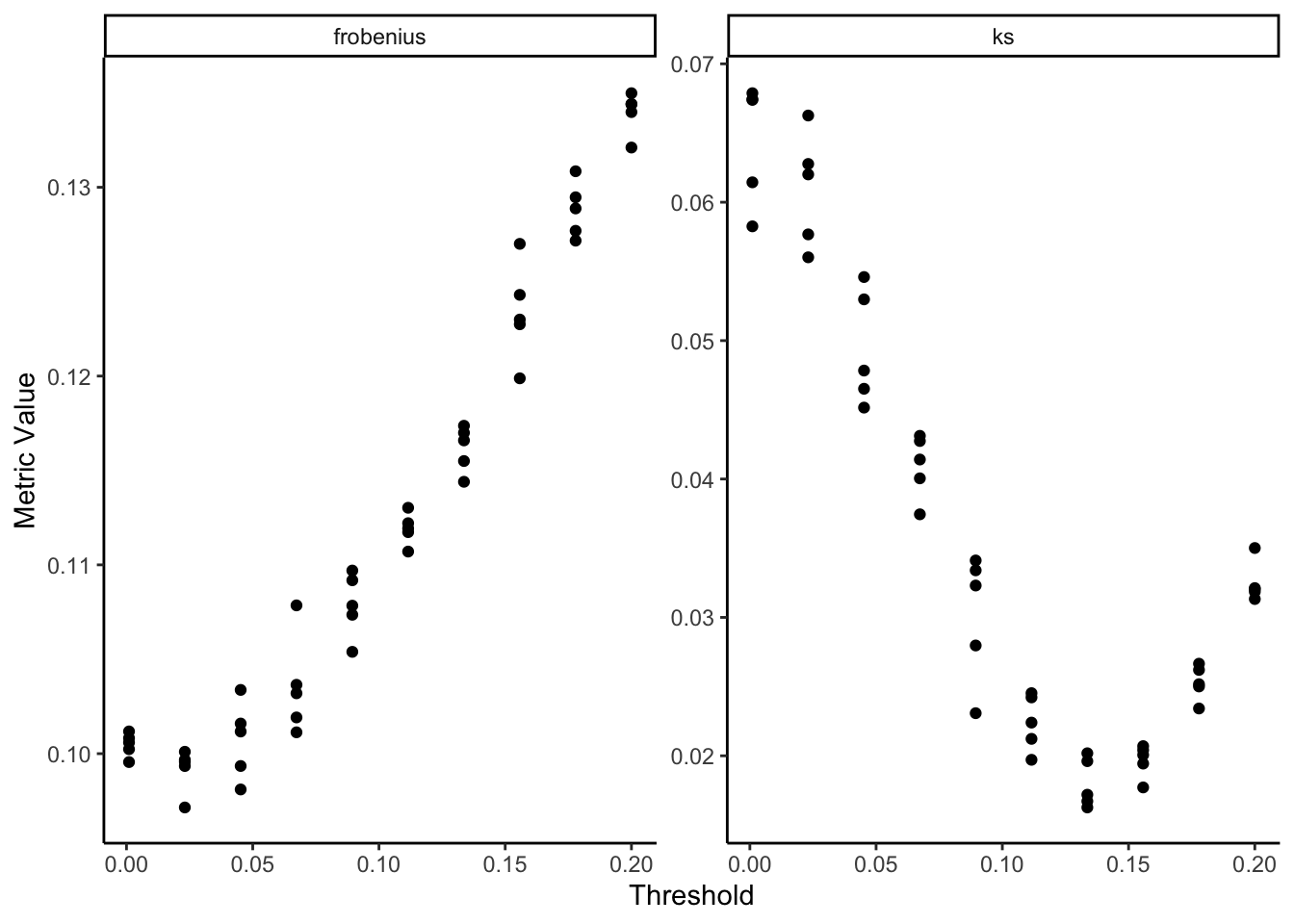

How did we choose this threshold? You can compare the correlations more systematically using this loop:

bivariate_metrics <- \(thr, n_rep = 5) {

# refit the simulator with current threshold

simulator <- setup_simulator(

t1d,

link_formula = ~outcome2,

family = ~ GaussianLSS(),

copula_def = copula_adaptive(thr = thr)

) |>

estimate(mstop = 100)

# compute metrics on five samples

err <- list()

for (i in 1:n_rep) {

sim_exper <- sample(simulator)[rownames(t1d), ]

rhos <- list(sim = cor(t(assay(sim_exper))), real = cor(t(assay(t1d))))

err[[i]] <- data.frame(

frobenius = sqrt(mean((rhos$sim - rhos$real)^2)),

ks = ks.test(rhos$sim, rhos$real)$statistic,

thr = thr

)

}

do.call(rbind, err)

}

errors <- seq(.001, 0.2, length.out = 10) |>

map_dfr(bivariate_metrics) |>

pivot_longer(-thr, names_to = "metric")

ggplot(errors) +

geom_point(aes(thr, value)) +

facet_wrap(~metric, scales = "free_y") +

labs(x = "Threshold", y = "Metric Value")

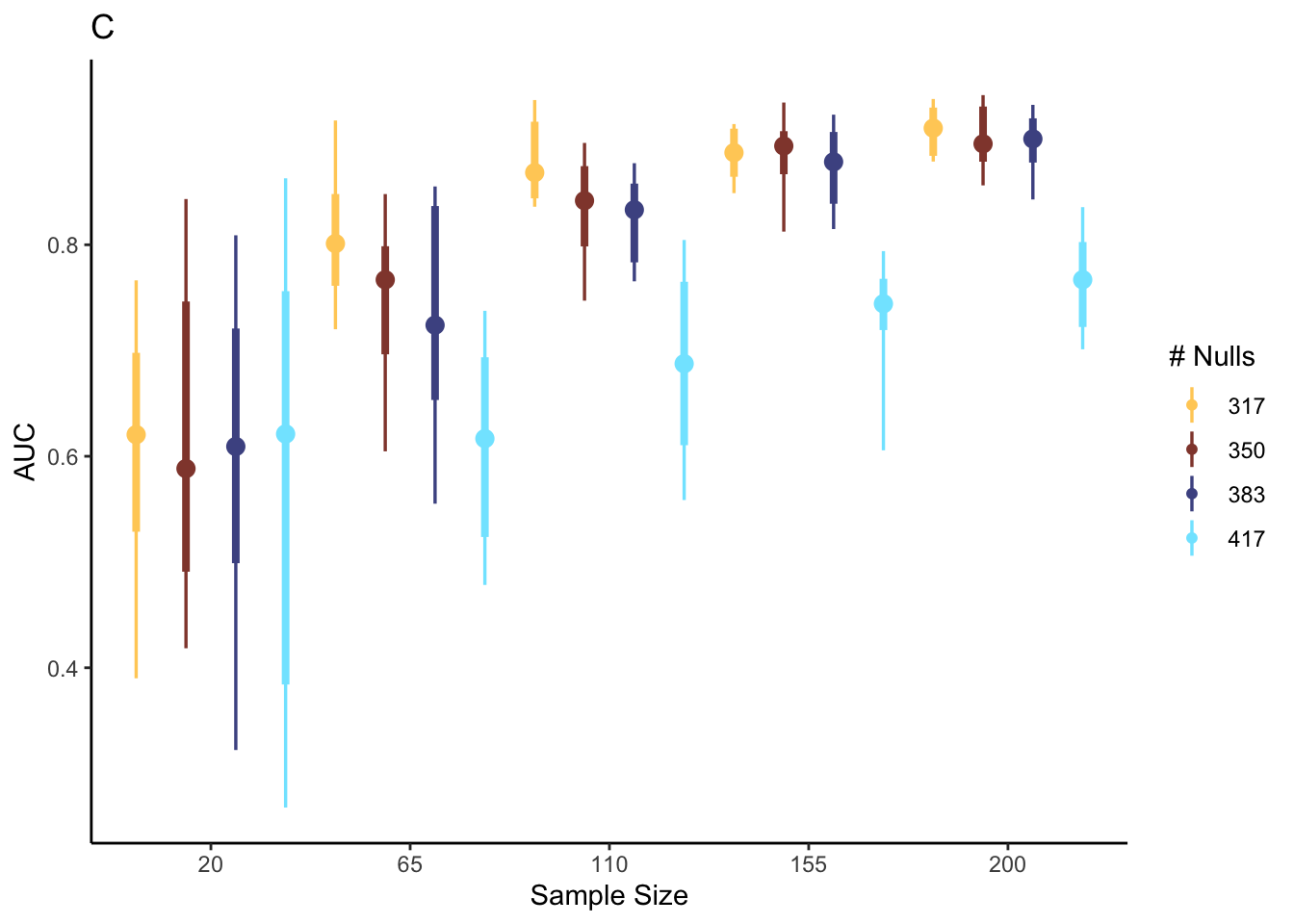

3.4 PLS-DA Power Analysis

Now that we have a simulator, we can run a power analysis. In theory, we could look at how any summary from the PLS-DA output varies as the sample size increases. The most natural one, though, is simply to see how classifier performance improves as we gather more samples. Specifically, we’ll measure the holdout Area Under the Curve (auc), a measure of how well the trains PLS-DA classifier balance precision and recall on new samples.

Moreover, we’ll study the effect of sparsity – what happens when many features

have no relationship at all with the response? We’ll also simulate three

hypothetical datasets for each sample size and sparsity level. All

configurations of interest are stored in the config matrix below.

config <- expand.grid(

sample_size = floor(seq(20, 200, length.out = 5)),

n_rep = 1:20,

n_null = floor(seq(317, 417, length.out = 4)),

metrics = NA

)

data(t1d_order)Exercise: Finally, we’re in a position to generate synthetic data and

evaluate PLS-DA performance. Fill in the block below to update the simulator for

each i. Remember that the original simulator defined above assumes that all

proteins are associated with T1D. You can use t1d_order to prioritize the

proteins with the strongest effects in the original data. As before, you should

only need to modify the line marked with the comments (#).

for (i in seq_len(nrow(config))) {

simulator <- simulator |>

mutate(

# fill this in

) |>

estimate(mstop = 100)

config$metrics[i] <- (sample_n(simulator, config$sample_size[i]) |>

splsda_fit())[["auc"]]

print(glue("run {i}/{nrow(config)}"))

}Solution: To define nulls, we mutate the weakest proteins so that there is no longer any

association with T1D: link = ~ 1 instead of link = ~ outcome2. To speed up

the computation, we organized the mutate calls so that we don’t need to

re-estimate proteins whose effects were removed in a previous iteration.

for (i in seq_len(nrow(config))) {

if (i == 1 || config$n_null[i] != config$n_null[i - 1]) {

simulator <- simulator |>

scDesigner::mutate(any_of(rev(t1d_order)[1:config$n_null[i]]), link = ~1) |>

estimate(mstop = 100)

}

config$metrics[i] <- (sample_n(simulator, config$sample_size[i]) |>

splsda_fit())[["auc"]]

print(glue("run {i}/{nrow(config)}"))

}

config <- readRDS(url("https://uwmadison.box.com/shared/static/tf1xyo7a2n2rd2ms4y9itaev42dsnp5c.rds")):::

We can visualize variation in performance.

ggplot(config, aes(factor(sample_size), metrics, col = factor(n_null))) +

stat_pointinterval(position = "dodge") +

scale_color_scico_d(palette = "managua") +

labs(x = "Sample Size", y = "AUC", color = "# Nulls", title = "C")

Discussion: Interpret the visualization above. How do you think analysis like this could help you justify making some experimental investments over others?

Solution: Reading across facets from top left to bottom right, power decreases when the number of null proteins increases. It seems that sPLS-DA can benefit from having many weakly associated features. While power is sometimes high in low sample sizes, the variance can be quite large. In all settings, there is a noticeable decrease in variance in power as we go from 15 to 48 samples. If we can assume that a moderate fraction (> 15%) of measured proteins are associated with T1D, then we may already achieve good power with ~ 100 samples. However, if we imagine our effect might be sparser in our future experiment, then this figure would give us good justification for arguing for a larger number of samples, in order to ensure we can discover a disease-associated proteomics signature.

ix <- colData(t1d)$outcome2 == "T1D"

p_vals <- apply(assay(t1d), 1, \(x) t.test(x[ix], x[-ix])$p.value)

storey_pi0_est(p_vals, 0.5)$pi0## [1] 1.3114753.5 Comparison with \(t\)-test calculation

What if we had based our power analysis using theory from \(t\)-tests? We might be hopeful that this theory might give a sample size that is also good enough for the sPLS-DA. To make this concrete, suppose that we will test all 427 taxa using a two-sample \(t\)-test with a Benjamini-Hochberg correction for multiple testing. Suppose that we are in the sparse case, with only 10 nonnull taxa. As a hypothetical true signal strength, we use the observed signal strength of the tenth most significant taxon from the real data,

subset_size <- 10

y <- colData(t1d)$outcome2

x <- assay(t1d)[t1d_order[subset_size], ]

test_result <- t.test(x[y == "T1D"], x[y == "healthy"])

signal_strength <- test_result$statistic

signal_strength## t

## -2.092196How many samples would we need in order to achieve 80% power in this setting? Accounting for multiple testing, we would need this taxon to be significant at the level \(\frac{10 \alpha}{427}\), which gives us,

result <- pwr.t.test(power = 0.8, d = signal_strength, sig.level = 0.05 * subset_size / nrow(t1d))

result$n## [1] 10.36249So, according to this power analysis, we only need 11 samples each for the healthy and disease groups. In comparison to our earlier analysis using sPLS-DA, this is very optimistic. In the case where only 10 taxa are significant, then when we had 20 samples total, the AUC was in the range \(\left[\right]\), which is only slightly better than random guessing. This is a case where a \(t\)-test power calculation would make us overoptimistic about the number of samples that are required. Both the data generation (two shifted normal distributions) and analysis (\(t\)-tests with BH correction) mechanisms assumed by this power analysis are suspect, and in this case it makes a difference. All power analysis requires some simplification, but the fact that a more realistic simulator points us towards noticeably larger sample sizes is an indication that we should not trust the \(t\)-test calculation and instead suggest the larger sample sample from our sPLS-DA specific analysis.

## R version 4.4.1 Patched (2024-08-21 r87049)

## Platform: aarch64-apple-darwin20

## Running under: macOS Sonoma 14.5

##

## Matrix products: default

## BLAS: /Library/Frameworks/R.framework/Versions/4.4-arm64/Resources/lib/libRblas.0.dylib

## LAPACK: /Library/Frameworks/R.framework/Versions/4.4-arm64/Resources/lib/libRlapack.dylib; LAPACK version 3.12.0

##

## locale:

## [1] en_US.UTF-8/en_US.UTF-8/en_US.UTF-8/C/en_US.UTF-8/en_US.UTF-8

##

## time zone: America/Chicago

## tzcode source: internal

##

## attached base packages:

## [1] splines parallel stats4 stats graphics grDevices utils datasets methods base

##

## other attached packages:

## [1] lubridate_1.9.3 readr_2.1.5 tidyverse_2.0.0

## [4] doRNG_1.8.6 rngtools_1.5.2 foreach_1.5.2

## [7] ggpubr_0.6.0 ggrepel_0.9.6 edgeR_4.3.16

## [10] limma_3.61.10 ANCOMBC_2.7.0 stringr_1.5.1

## [13] ks_1.14.3 tidySummarizedExperiment_1.15.1 ttservice_0.4.1

## [16] SimBench_0.99.1 wesanderson_0.3.7 mia_1.13.36

## [19] MultiAssayExperiment_1.31.5 TreeSummarizedExperiment_2.13.0 Biostrings_2.73.1

## [22] XVector_0.45.0 SingleCellExperiment_1.27.2 gamlss_5.4-22

## [25] nlme_3.1-166 gamlss.dist_6.1-1 gamlss.data_6.0-6

## [28] scDesigner_0.0.0.9000 purrr_1.0.2 MIGsim_0.0.0.9000

## [31] tidyr_1.3.1 tibble_3.2.1 scico_1.5.0

## [34] pwr_1.3-0 patchwork_1.3.0 mutoss_0.1-13

## [37] mvtnorm_1.3-1 mixOmics_6.29.0 lattice_0.22-6

## [40] MASS_7.3-61 glue_1.8.0 ggplot2_3.5.1

## [43] ggdist_3.3.2 gamboostLSS_2.0-7 mboost_2.9-11

## [46] stabs_0.6-4 forcats_1.0.0 dplyr_1.1.4

## [49] SummarizedExperiment_1.35.1 Biobase_2.65.1 GenomicRanges_1.57.1

## [52] GenomeInfoDb_1.41.1 IRanges_2.39.2 S4Vectors_0.43.2

## [55] BiocGenerics_0.51.1 MatrixGenerics_1.17.0 matrixStats_1.4.1

## [58] SpiecEasi_1.1.3 CovTools_0.5.4

##

## loaded via a namespace (and not attached):

## [1] igraph_2.0.3 ica_1.0-3 plotly_4.10.4

## [4] Formula_1.2-5 scater_1.33.4 zlibbioc_1.51.1

## [7] tidyselect_1.2.1 bit_4.5.0 doParallel_1.0.17

## [10] pspline_1.0-20 blob_1.2.4 S4Arrays_1.5.8

## [13] png_0.1-8 plotrix_3.8-4 cli_3.6.3

## [16] CVXR_1.0-14 pulsar_0.3.11 arrayhelpers_1.1-0

## [19] multtest_2.61.0 goftest_1.2-3 textshaping_0.4.0

## [22] bluster_1.15.1 BiocNeighbors_1.99.1 uwot_0.2.2

## [25] mime_0.12 evaluate_1.0.0 tidytree_0.4.6

## [28] leiden_0.4.3.1 stringi_1.8.4 backports_1.5.0

## [31] lmerTest_3.1-3 Exact_3.3 gsl_2.1-8

## [34] httpuv_1.6.15 AnnotationDbi_1.67.0 magrittr_2.0.3

## [37] mclust_6.1.1 ADGofTest_0.3 pcaPP_2.0-5

## [40] rootSolve_1.8.2.4 sctransform_0.4.1 lpSolve_5.6.21

## [43] ggbeeswarm_0.7.2 DBI_1.2.3 jquerylib_0.1.4

## [46] withr_3.0.1 corpcor_1.6.10 systemfonts_1.1.0

## [49] class_7.3-22 lmtest_0.9-40 compositions_2.0-8

## [52] htmlwidgets_1.6.4 fs_1.6.4 labeling_0.4.3

## [55] SparseArray_1.5.38 DEoptimR_1.1-3 cellranger_1.1.0

## [58] DESeq2_1.45.3 tidybayes_3.0.7 extrafont_0.19

## [61] lmom_3.0 flare_1.7.0.1 reticulate_1.39.0

## [64] minpack.lm_1.2-4 geigen_2.3 zoo_1.8-12

## [67] knitr_1.48 nnls_1.5 UCSC.utils_1.1.0

## [70] svUnit_1.0.6 SHT_0.1.8 timechange_0.3.0

## [73] decontam_1.25.0 fansi_1.0.6 grid_4.4.1

## [76] rhdf5_2.49.0 data.table_1.16.0 vegan_2.6-8

## [79] RSpectra_0.16-2 irlba_2.3.5.1 extrafontdb_1.0

## [82] DescTools_0.99.56 ellipsis_0.3.2 fastDummies_1.7.4

## [85] ade4_1.7-22 lazyeval_0.2.2 yaml_2.3.10

## [88] survival_3.7-0 scattermore_1.2 crayon_1.5.3

## [91] mediation_4.5.0 tensorA_0.36.2.1 phyloseq_1.49.0

## [94] RcppAnnoy_0.0.22 RColorBrewer_1.1-3 progressr_0.14.0

## [97] later_1.3.2 ggridges_0.5.6 codetools_0.2-20

## [100] base64enc_0.1-3 Seurat_5.1.0 KEGGREST_1.45.1

## [103] Rtsne_0.17 shape_1.4.6.1 randtoolbox_2.0.4

## [106] foreign_0.8-87 pkgconfig_2.0.3 xml2_1.3.6

## [109] spatstat.univar_3.0-1 downlit_0.4.4 scatterplot3d_0.3-44

## [112] spatstat.sparse_3.1-0 ape_5.8 viridisLite_0.4.2

## [115] xtable_1.8-4 highr_0.11 car_3.1-2

## [118] fastcluster_1.2.6 plyr_1.8.9 httr_1.4.7

## [121] rbibutils_2.2.16 tools_4.4.1 globals_0.16.3

## [124] SeuratObject_5.0.2 kde1d_1.0.7 beeswarm_0.4.0

## [127] htmlTable_2.4.3 broom_1.0.6 checkmate_2.3.2

## [130] rgl_1.3.1 assertthat_0.2.1 lme4_1.1-35.5

## [133] rngWELL_0.10-9 digest_0.6.37 bayesm_3.1-6

## [136] permute_0.9-7 numDeriv_2016.8-1.1 bookdown_0.40

## [139] Matrix_1.7-0 tzdb_0.4.0 farver_2.1.2

## [142] reshape2_1.4.4 yulab.utils_0.1.7 viridis_0.6.5

## [145] DirichletMultinomial_1.47.0 rpart_4.1.23 cachem_1.1.0

## [148] polyclip_1.10-7 WGCNA_1.73 Hmisc_5.1-3

## [151] generics_0.1.3 dynamicTreeCut_1.63-1 parallelly_1.38.0

## [154] biomformat_1.33.0 statmod_1.5.0 impute_1.79.0

## [157] huge_1.3.5 ragg_1.3.3 RcppHNSW_0.6.0

## [160] ScaledMatrix_1.13.0 carData_3.0-5 minqa_1.2.8

## [163] pbapply_1.7-2 glmnet_4.1-8 spam_2.10-0

## [166] utf8_1.2.4 gtools_3.9.5 shapes_1.2.7

## [169] readxl_1.4.3 preprocessCore_1.67.0 inum_1.0-5

## [172] ggsignif_0.6.4 energy_1.7-12 gridExtra_2.3

## [175] shiny_1.9.1 GenomeInfoDbData_1.2.12 rhdf5filters_1.17.0

## [178] memoise_2.0.1 rmarkdown_2.28 scales_1.3.0

## [181] gld_2.6.6 stabledist_0.7-2 partykit_1.2-22

## [184] svglite_2.1.3 future_1.34.0 RANN_2.6.2

## [187] spatstat.data_3.1-2 rstudioapi_0.16.0 cluster_2.1.6

## [190] spatstat.utils_3.1-0 hms_1.1.3 fitdistrplus_1.2-1

## [193] munsell_0.5.1 cowplot_1.1.3 colorspace_2.1-1

## [196] ellipse_0.5.0 rlang_1.1.4 quadprog_1.5-8

## [199] copula_1.1-4 DelayedMatrixStats_1.27.3 sparseMatrixStats_1.17.2

## [202] dotCall64_1.1-1 scuttle_1.15.4 mgcv_1.9-1

## [205] xfun_0.47 coda_0.19-4.1 e1071_1.7-16

## [208] TH.data_1.1-2 posterior_1.6.0 iterators_1.0.14

## [211] rARPACK_0.11-0 abind_1.4-8 libcoin_1.0-10

## [214] treeio_1.29.1 gmp_0.7-5 Rhdf5lib_1.27.0

## [217] DECIPHER_3.1.4 Rdpack_2.6.1 promises_1.3.0

## [220] RSQLite_2.3.7 sandwich_3.1-1 proxy_0.4-27

## [223] DelayedArray_0.31.11 Rmpfr_0.9-5 GO.db_3.20.0

## [226] compiler_4.4.1 prettyunits_1.2.0 boot_1.3-31

## [229] distributional_0.5.0 beachmat_2.21.6 rbiom_1.0.3

## [232] listenv_0.9.1 Rcpp_1.0.13 Rttf2pt1_1.3.12

## [235] BiocSingular_1.21.4 tensor_1.5 progress_1.2.3

## [238] BiocParallel_1.39.0 insight_0.20.5 spatstat.random_3.3-2

## [241] R6_2.5.1 fastmap_1.2.0 multcomp_1.4-26

## [244] rstatix_0.7.2 vipor_0.4.7 ROCR_1.0-11

## [247] rsvd_1.0.5 nnet_7.3-19 gtable_0.3.5

## [250] KernSmooth_2.23-24 miniUI_0.1.1.1 deldir_2.0-4

## [253] htmltools_0.5.8.1 ggthemes_5.1.0 RcppParallel_5.1.9

## [256] bit64_4.5.2 spatstat.explore_3.3-2 lifecycle_1.0.4

## [259] nloptr_2.1.1 sass_0.4.9 vctrs_0.6.5

## [262] robustbase_0.99-4 VGAM_1.1-12 slam_0.1-53

## [265] spatstat.geom_3.3-3 sp_2.1-4 future.apply_1.11.2

## [268] pracma_2.4.4 rvinecopulib_0.6.3.1.1 bslib_0.8.0

## [271] pillar_1.9.0 locfit_1.5-9.10 jsonlite_1.8.9

## [274] expm_1.0-0