5 Batch Effect Correction

Integration can be a subtle exercise. We need to balance our interest in seeing

similarities between datasets with the risk of making things seem more similar

than they really are. Simulation can help navigate this subtlety by letting us

see how integration methods would behave in situations where we know exactly how

the different datasets are related. This note will illustrate this perspective

by showing how simulation can help with both horizontal (across batches) and

vertical (across assays) integration. We’ll have a brief interlude on the map

function in the purrr, which is helpful for concisely writing code that would

otherwise need for loops (e.g., over batches or assays).

5.1 Anaerobic Digestion Data

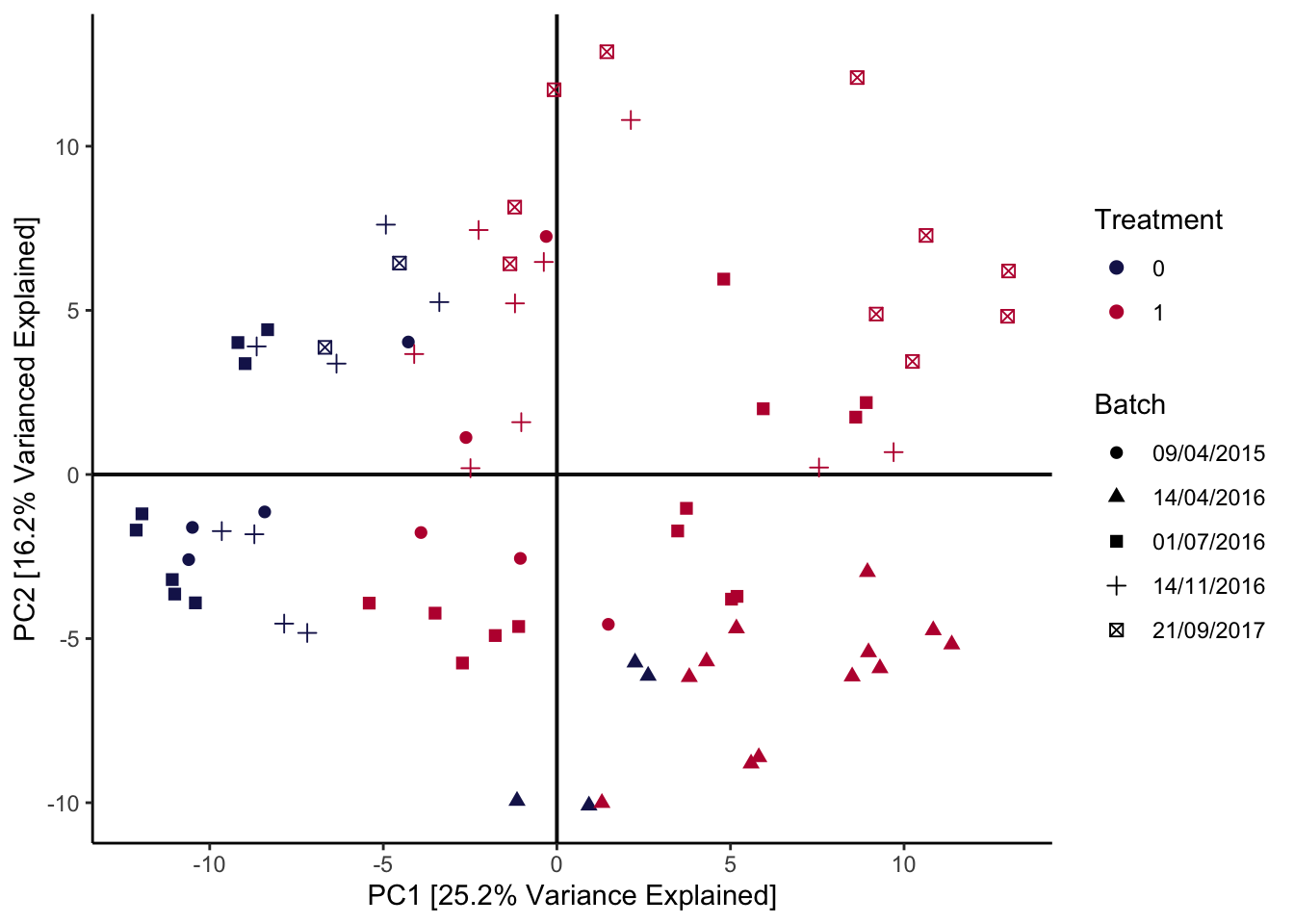

This example is about simultaneously analyzing several batches in a dataset about the efficiency of anaerobic digestion (AD) of organic matter. The essential problem is that, in this study, the samples could not be collected simultaneously. Small differences across separate runs could lead to systematic differences in the resulting data, which can obfuscate the more interesting between-group variation that the experiment was intended to uncover. For example, in the AD dataset, the date of the sequencing run has a global effect on measured community composition, which we can see right away from a principal components plot:

data(anaerobic)

pca_batch(anaerobic, facet = FALSE) +

scale_color_manual(values = c("#191C59", "#bc0c3c")) +

labs(

col = "Treatment",

shape = "Batch",

x = "PC1 [25.2% Variance Explained]",

y = "PC2 [16.2% Varianced Explained]"

)

You can learn more about the general microbiome batch effect integration problem in (Wang and Le Cao, 2020), which is where this dataset example and the batch effect correction code below comes from. The article also reviews mechanisms that could lead to batch effects in microbiome data, together with methods for removing these effects and the situations within which they are most appropriate.

In batch effect correction, it’s important to remove as much of the batch variation as possible without accidentally also removing the real biological variation that would have been present even if all the samples had been sequenced together. This is sometimes called ``overintegration,’’ and this is an especially high risk if some of the real biological variation is quite subtle, e.g., a rare cell type or one that is very similar to a more prominent one. Simulation can help us gauge the extent to which different methods may or may not overintegrate. Since we get to control the between-batch and and between-biological-condition differences, we can see the extent to which integration methods can remove the former while preserving the latter.

5.2 Simulation Design

The block below estimates a candidate simulator. By using the formula ~ batch + treatment, we’re allowing for taxon-wise differences due to batch and

treatment. Note that in principle, we could estimate an interaction between

batch and treatment (the treatment could appear stronger in some batches than

others). I encourage you to try estimating that model; however, visually

analyzing the output suggests that this full model has a tendancy to overfit.

Since the data have already been centered log-ratio transformed, we can try out

a Gaussian marginal model. The AD dataset has relatively few samples

compared to the number of features, so we’ll use a copula that’s designed for

this setting.

simulator <- setup_simulator(

anaerobic,

~ batch + treatment,

~ GaussianLSS(),

copula = copula_adaptive(thr = .1)

) |>

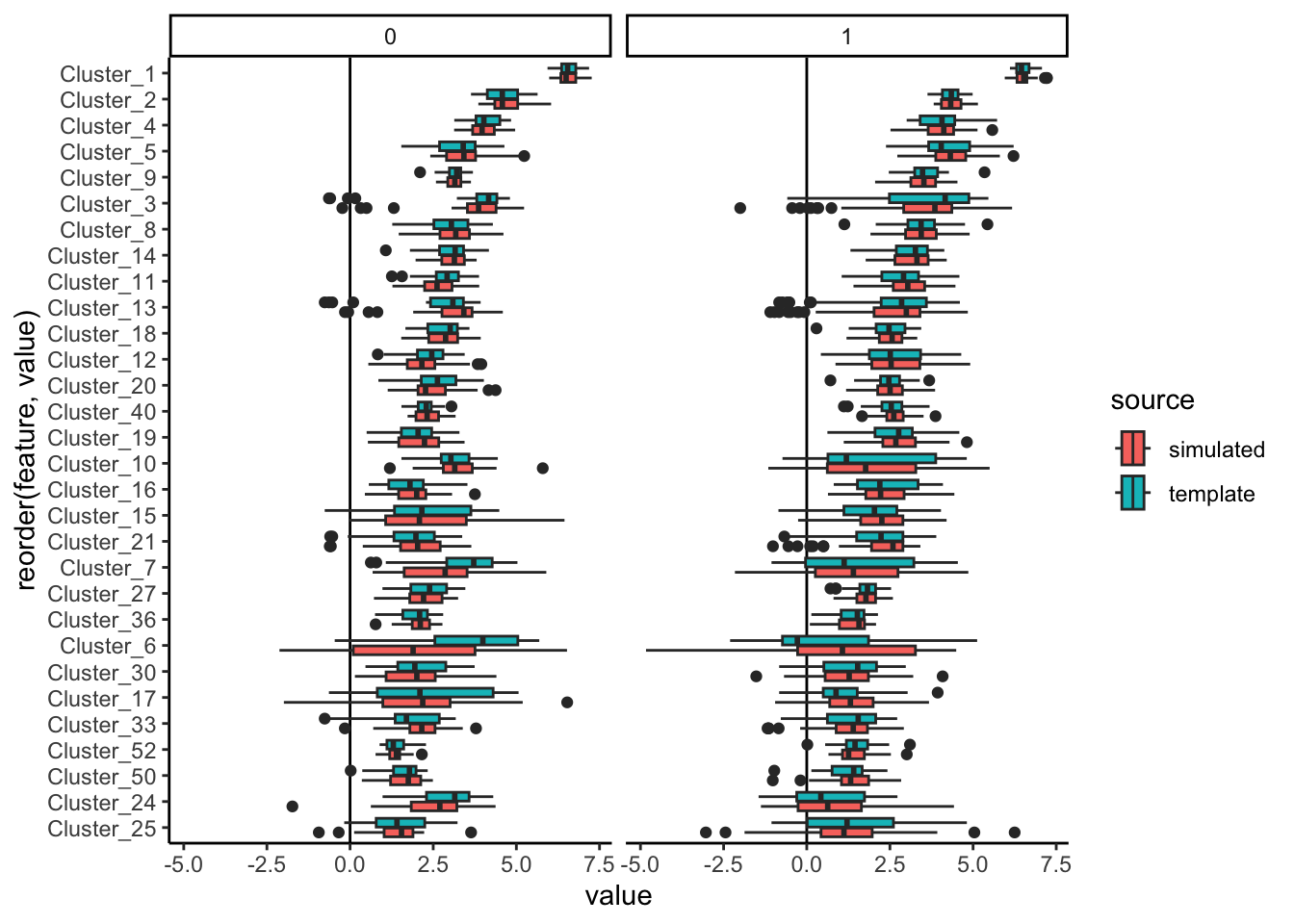

estimate(nu = 0.05, mstop = 100) # lower nu -> stable trainingWe can simulate from the fitted model and evaluate the quality of our fit using

contrast_boxplot. This is a light wrapper of the ggplot2 code we used to

compare experiments from our first session, and you can read its definition

here.

anaerobic_sim <- sample(simulator)

contrast_boxplot(anaerobic, anaerobic_sim)

Exercise Propose and create at least one other visualization that can be used to compare and contrast the simulator with real data. What conclusions can you draw?

Solution: There are many possible answers:

- Boxplots across taxa with different overall abundance levels.

- Analogous histograms or CDF plots, to show the entire distributions, rather than just summarized quantiles.

- Pair scatterplots, to see how well the bivariate relationships between taxa are preserved.

- Dimensionality reduction on the simulated data, to see how well it matches global structure in the original data.

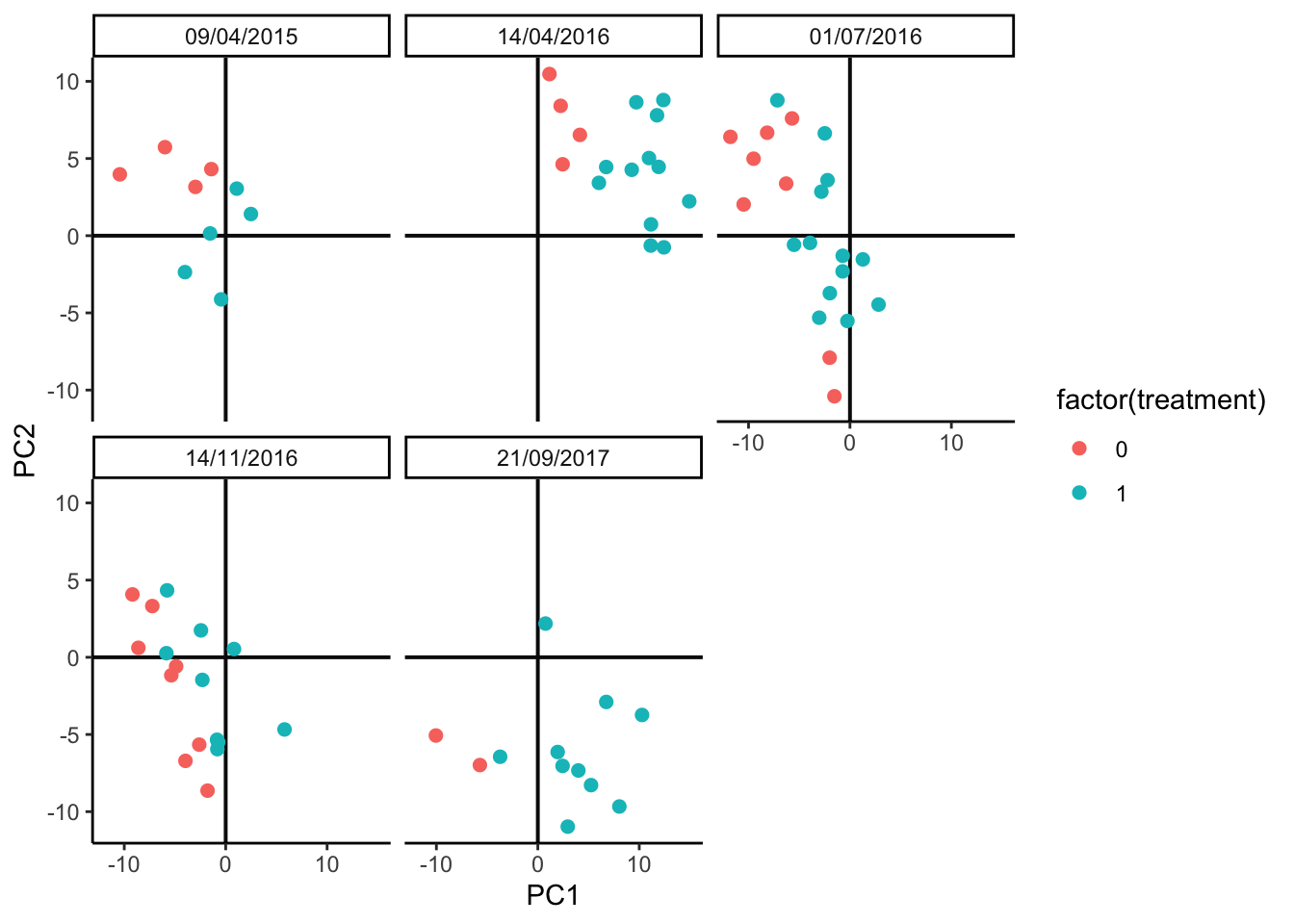

We’ll implement the last idea using PCA. This should be contrasted with the PCA plot on the original data above. It’s okay if the plot seems rotated relative to the oiginal plot – PCA is only unique up to rotation. The main characteristic we’re looking for is that the relative sizes of the batch and treatment effects seem reasonaly well-preserved, since these will be the types of effects that our later batch effect integration methods must be able to distinguish.

pca_batch(anaerobic_sim)

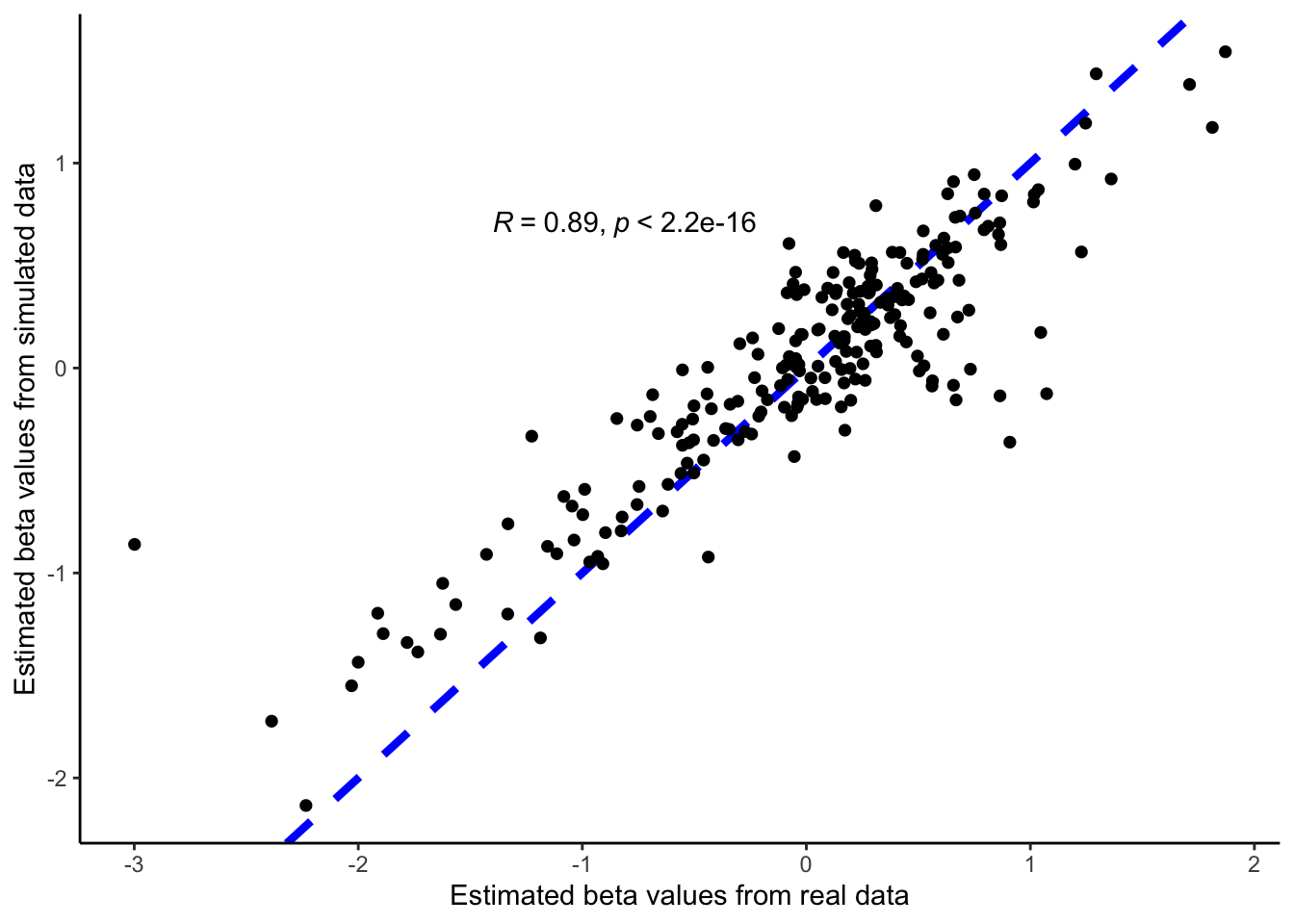

In addition to visual evaluation, we can also perform a narrow evaluation using the parameter estimations from RUV. The idea here is that the parameter estimation from RUV should be similar for simulation data and real data. In the RUV model, we estimate the treatment effect for each feature in simulation and real data sets and are plotted in the scatter plot along with the correlation value between the two. As seen in the figure there is good agreement with the parameter estimations from the two datasets with a correlation value of 0.66.

fit_sim <- ruv::RUV4(t(assay(anaerobic_sim)), colData(anaerobic_sim)$treatment, k = 0)

fit_org <- ruv::RUV4(t(assay(anaerobic)), colData(anaerobic)$treatment, k = 0)

bind_cols(org = c(fit_org$betahat), sim = c(fit_sim$betahat)) |>

ggplot(aes(org, sim)) +

geom_abline(color = "blue", lwd = 1.5, lty = 2) +

geom_point(size = 2, shape = 16) +

theme_classic() +

ylab("Estimated beta values from simulated data") +

xlab("Estimated beta values from real data") +

stat_cor(method = "pearson", label.x = -1.4, label.y = 0.7) :::

:::To study the risk for overintegration, let’s imagine that there were a third

treatment group with relatively fewer samples. This is the type of group that a

correction method might accidentally blend in with the rest, if it’s too

aggressive. We’ve defined the imaginary experiment using the data.frame below.

The treatment level 1.8 is the new one. We’ve supposed there are between 1 -

3 technical replicates (extraction) for each biological sample (sample), and

the batch dates are the same as before.

## extraction batch treatment rep sample

## Min. :1 09/04/2015:36 Min. :0.0000 Min. :1.00 1 : 2

## 1st Qu.:1 14/04/2016:36 1st Qu.:0.0000 1st Qu.:1.75 2 : 2

## Median :2 01/07/2016:36 Median :1.0000 Median :2.50 3 : 2

## Mean :2 14/11/2016:36 Mean :0.7167 Mean :2.75 4 : 2

## 3rd Qu.:3 21/09/2017:36 3rd Qu.:1.0000 3rd Qu.:4.00 5 : 2

## Max. :3 Max. :1.8000 Max. :5.00 6 : 2

## (Other):168We can simulate from the new design and look at how different this new treatment group seems from the others. It’s a subtle effect, definitely smaller than the batch effect, but also separate enough that we should be able to preserve it.

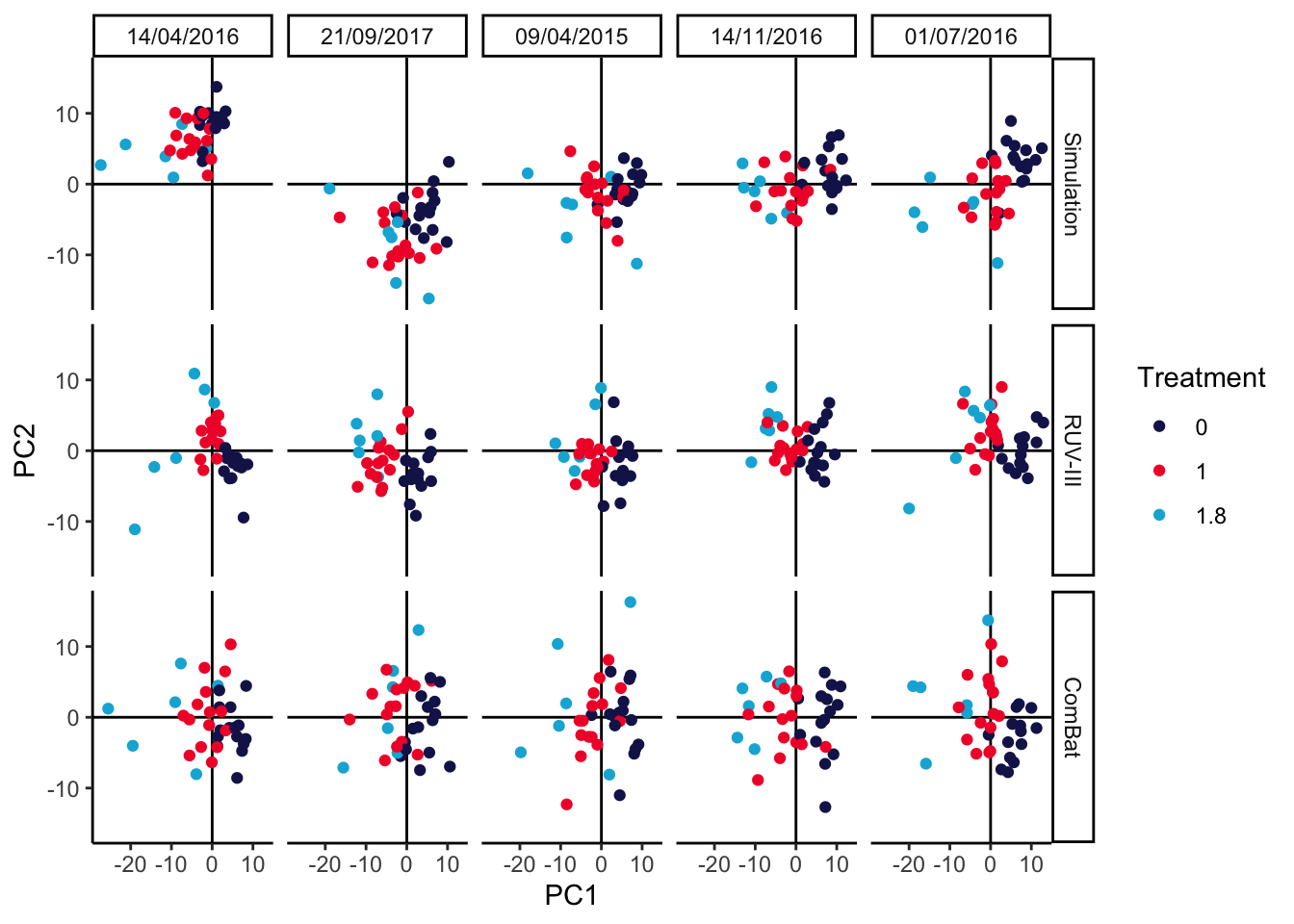

5.3 Benchmarking

We’ve defined a batch_correct wrapper function that implements either the

RUV-III or ComBat batch effect correction methods. Their outputs are contrasted

in the PCAs below. It looks like ComBat might be somewhat too aggressive,

causing the 1 and 1.8 treatment groups to substantially overlap, while RUV

is a bit more conservative, keeping the treatment groups nicely separate. As an

aside, we note that this conclusion can depend on the number of replicates and

total number of samples available. We’ve included the code for generating the

imaginary_design data.frame in a vignette

for the MIGsim package. Can you find settings that lead either method astray?

p[["ruv"]] <- pca_batch(batch_correct(anaerobic_sim, "ruv"))

p[["combat"]] <- pca_batch(batch_correct(anaerobic_sim, "combat"))

prediction_errors <- function(df) {

y_hat <- predict(lda(treatment ~ PC1 + PC2, data = df), type = "response")$class

table(df$treatment, y_hat)

}

map(p, ~ prediction_errors(.$data))## $sim

## y_hat

## 0 1 1.8

## 0 62 13 0

## 1 15 56 4

## 1.8 1 14 15

##

## $ruv

## y_hat

## 0 1 1.8

## 0 70 5 0

## 1 2 67 6

## 1.8 0 8 22

##

## $combat

## y_hat

## 0 1 1.8

## 0 65 10 0

## 1 12 59 4

## 1.8 1 12 17## R version 4.4.1 Patched (2024-08-21 r87049)

## Platform: aarch64-apple-darwin20

## Running under: macOS Sonoma 14.5

##

## Matrix products: default

## BLAS: /Library/Frameworks/R.framework/Versions/4.4-arm64/Resources/lib/libRblas.0.dylib

## LAPACK: /Library/Frameworks/R.framework/Versions/4.4-arm64/Resources/lib/libRlapack.dylib; LAPACK version 3.12.0

##

## locale:

## [1] en_US.UTF-8/en_US.UTF-8/en_US.UTF-8/C/en_US.UTF-8/en_US.UTF-8

##

## time zone: America/Chicago

## tzcode source: internal

##

## attached base packages:

## [1] splines parallel stats4 stats graphics grDevices utils datasets methods base

##

## other attached packages:

## [1] lubridate_1.9.3 readr_2.1.5 tidyverse_2.0.0 doRNG_1.8.6 rngtools_1.5.2

## [6] foreach_1.5.2 ggpubr_0.6.0 ggrepel_0.9.6 edgeR_4.3.16 limma_3.61.10

## [11] ANCOMBC_2.7.0 stringr_1.5.1 ks_1.14.3 tidySummarizedExperiment_1.15.1 ttservice_0.4.1

## [16] SimBench_0.99.1 wesanderson_0.3.7 mia_1.13.36 MultiAssayExperiment_1.31.5 TreeSummarizedExperiment_2.13.0

## [21] Biostrings_2.73.1 XVector_0.45.0 SingleCellExperiment_1.27.2 gamlss_5.4-22 nlme_3.1-166

## [26] gamlss.dist_6.1-1 gamlss.data_6.0-6 scDesigner_0.0.0.9000 purrr_1.0.2 MIGsim_0.0.0.9000

## [31] tidyr_1.3.1 tibble_3.2.1 scico_1.5.0 pwr_1.3-0 patchwork_1.3.0

## [36] mutoss_0.1-13 mvtnorm_1.3-1 mixOmics_6.29.0 lattice_0.22-6 MASS_7.3-61

## [41] glue_1.8.0 ggplot2_3.5.1 ggdist_3.3.2 gamboostLSS_2.0-7 mboost_2.9-11

## [46] stabs_0.6-4 forcats_1.0.0 dplyr_1.1.4 SummarizedExperiment_1.35.1 Biobase_2.65.1

## [51] GenomicRanges_1.57.1 GenomeInfoDb_1.41.1 IRanges_2.39.2 S4Vectors_0.43.2 BiocGenerics_0.51.1

## [56] MatrixGenerics_1.17.0 matrixStats_1.4.1 SpiecEasi_1.1.3 CovTools_0.5.4

##

## loaded via a namespace (and not attached):

## [1] igraph_2.0.3 ica_1.0-3 plotly_4.10.4 Formula_1.2-5 scater_1.33.4 zlibbioc_1.51.1

## [7] tidyselect_1.2.1 bit_4.5.0 doParallel_1.0.17 pspline_1.0-20 blob_1.2.4 S4Arrays_1.5.8

## [13] png_0.1-8 plotrix_3.8-4 cli_3.6.3 CVXR_1.0-14 pulsar_0.3.11 arrayhelpers_1.1-0

## [19] multtest_2.61.0 goftest_1.2-3 textshaping_0.4.0 bluster_1.15.1 BiocNeighbors_1.99.1 uwot_0.2.2

## [25] mime_0.12 evaluate_1.0.0 tidytree_0.4.6 leiden_0.4.3.1 stringi_1.8.4 backports_1.5.0

## [31] XML_3.99-0.17 lmerTest_3.1-3 Exact_3.3 gsl_2.1-8 httpuv_1.6.15 AnnotationDbi_1.67.0

## [37] magrittr_2.0.3 mclust_6.1.1 ADGofTest_0.3 pcaPP_2.0-5 rootSolve_1.8.2.4 sctransform_0.4.1

## [43] lpSolve_5.6.21 ggbeeswarm_0.7.2 DBI_1.2.3 genefilter_1.87.0 jquerylib_0.1.4 withr_3.0.1

## [49] corpcor_1.6.10 systemfonts_1.1.0 class_7.3-22 lmtest_0.9-40 sva_3.53.0 compositions_2.0-8

## [55] htmlwidgets_1.6.4 fs_1.6.4 labeling_0.4.3 SparseArray_1.5.38 DEoptimR_1.1-3 cellranger_1.1.0

## [61] DESeq2_1.45.3 tidybayes_3.0.7 extrafont_0.19 annotate_1.83.0 lmom_3.0 flare_1.7.0.1

## [67] reticulate_1.39.0 minpack.lm_1.2-4 geigen_2.3 zoo_1.8-12 knitr_1.48 nnls_1.5

## [73] UCSC.utils_1.1.0 svUnit_1.0.6 SHT_0.1.8 timechange_0.3.0 decontam_1.25.0 fansi_1.0.6

## [79] grid_4.4.1 rhdf5_2.49.0 data.table_1.16.0 ruv_0.9.7.1 vegan_2.6-8 RSpectra_0.16-2

## [85] irlba_2.3.5.1 extrafontdb_1.0 DescTools_0.99.56 ellipsis_0.3.2 fastDummies_1.7.4 ade4_1.7-22

## [91] lazyeval_0.2.2 yaml_2.3.10 survival_3.7-0 scattermore_1.2 crayon_1.5.3 mediation_4.5.0

## [97] tensorA_0.36.2.1 phyloseq_1.49.0 RcppAnnoy_0.0.22 RColorBrewer_1.1-3 progressr_0.14.0 later_1.3.2

## [103] ggridges_0.5.6 codetools_0.2-20 base64enc_0.1-3 Seurat_5.1.0 KEGGREST_1.45.1 Rtsne_0.17

## [109] shape_1.4.6.1 randtoolbox_2.0.4 foreign_0.8-87 pkgconfig_2.0.3 xml2_1.3.6 spatstat.univar_3.0-1

## [115] downlit_0.4.4 scatterplot3d_0.3-44 spatstat.sparse_3.1-0 ape_5.8 viridisLite_0.4.2 xtable_1.8-4

## [121] highr_0.11 car_3.1-2 fastcluster_1.2.6 plyr_1.8.9 httr_1.4.7 rbibutils_2.2.16

## [127] tools_4.4.1 globals_0.16.3 SeuratObject_5.0.2 kde1d_1.0.7 beeswarm_0.4.0 htmlTable_2.4.3

## [133] broom_1.0.6 checkmate_2.3.2 rgl_1.3.1 assertthat_0.2.1 lme4_1.1-35.5 rngWELL_0.10-9

## [139] digest_0.6.37 bayesm_3.1-6 permute_0.9-7 numDeriv_2016.8-1.1 bookdown_0.40 Matrix_1.7-0

## [145] tzdb_0.4.0 farver_2.1.2 reshape2_1.4.4 yulab.utils_0.1.7 viridis_0.6.5 DirichletMultinomial_1.47.0

## [151] rpart_4.1.23 cachem_1.1.0 polyclip_1.10-7 WGCNA_1.73 Hmisc_5.1-3 generics_0.1.3

## [157] dynamicTreeCut_1.63-1 parallelly_1.38.0 biomformat_1.33.0 statmod_1.5.0 impute_1.79.0 huge_1.3.5

## [163] ragg_1.3.3 RcppHNSW_0.6.0 ScaledMatrix_1.13.0 carData_3.0-5 minqa_1.2.8 pbapply_1.7-2

## [169] glmnet_4.1-8 spam_2.10-0 utf8_1.2.4 gtools_3.9.5 shapes_1.2.7 readxl_1.4.3

## [175] preprocessCore_1.67.0 inum_1.0-5 ggsignif_0.6.4 energy_1.7-12 gridExtra_2.3 shiny_1.9.1

## [181] GenomeInfoDbData_1.2.12 rhdf5filters_1.17.0 memoise_2.0.1 rmarkdown_2.28 scales_1.3.0 gld_2.6.6

## [187] stabledist_0.7-2 partykit_1.2-22 svglite_2.1.3 future_1.34.0 RANN_2.6.2 spatstat.data_3.1-2

## [193] rstudioapi_0.16.0 cluster_2.1.6 spatstat.utils_3.1-0 hms_1.1.3 fitdistrplus_1.2-1 munsell_0.5.1

## [199] cowplot_1.1.3 colorspace_2.1-1 ellipse_0.5.0 rlang_1.1.4 quadprog_1.5-8 copula_1.1-4

## [205] DelayedMatrixStats_1.27.3 sparseMatrixStats_1.17.2 dotCall64_1.1-1 scuttle_1.15.4 mgcv_1.9-1 xfun_0.47

## [211] coda_0.19-4.1 e1071_1.7-16 TH.data_1.1-2 posterior_1.6.0 iterators_1.0.14 rARPACK_0.11-0

## [217] abind_1.4-8 libcoin_1.0-10 treeio_1.29.1 gmp_0.7-5 Rhdf5lib_1.27.0 DECIPHER_3.1.4

## [223] Rdpack_2.6.1 promises_1.3.0 RSQLite_2.3.7 sandwich_3.1-1 proxy_0.4-27 DelayedArray_0.31.11

## [229] Rmpfr_0.9-5 GO.db_3.20.0 compiler_4.4.1 prettyunits_1.2.0 boot_1.3-31 distributional_0.5.0

## [235] beachmat_2.21.6 rbiom_1.0.3 listenv_0.9.1 Rcpp_1.0.13 Rttf2pt1_1.3.12 BiocSingular_1.21.4

## [241] tensor_1.5 progress_1.2.3 BiocParallel_1.39.0 insight_0.20.5 spatstat.random_3.3-2 R6_2.5.1

## [247] fastmap_1.2.0 multcomp_1.4-26 rstatix_0.7.2 vipor_0.4.7 ROCR_1.0-11 rsvd_1.0.5

## [253] nnet_7.3-19 gtable_0.3.5 KernSmooth_2.23-24 miniUI_0.1.1.1 deldir_2.0-4 htmltools_0.5.8.1

## [259] ggthemes_5.1.0 RcppParallel_5.1.9 bit64_4.5.2 spatstat.explore_3.3-2 lifecycle_1.0.4 nloptr_2.1.1

## [265] sass_0.4.9 vctrs_0.6.5 robustbase_0.99-4 VGAM_1.1-12 slam_0.1-53 spatstat.geom_3.3-3

## [271] sp_2.1-4 future.apply_1.11.2 pracma_2.4.4 rvinecopulib_0.6.3.1.1 bslib_0.8.0 pillar_1.9.0

## [277] locfit_1.5-9.10 jsonlite_1.8.9 expm_1.0-0